Inside Meta’s ‘Cocoon’ Strategy

Everyone’s watching model releases and benchmark charts. Meanwhile, the interface is thickening - and Meta is collapsing the gap between your intent and its consequence.

This story isn’t about one specific project or product. It’s about the bigger picture. It’s easy to think any AI-Mediated ‘Cocoon’ future is still a long way off. But read on and you may be surprised how much progress has already been made.

Meta is a particularly good example because the distribution channel is already there: ~3.54 billion daily users across its Family of Apps. And the product that turns AI-Mediation into something you can wear has already shipped in the millions - more than two million pairs of Ray-Ban Meta smart glasses sold since the current generation launched in late 2023.

But these are just numbers to set the scene. If you want to understand Meta’s full strategy, you don’t start with the models. You need to step back and look at the loop.

“The rest of this decade seems likely to be the decisive period for determining the path this technology will take, and whether superintelligence will be a tool for personal empowerment or a force focused on replacing large swaths of society.”

- Mark Zuckerberg

And it’s worth noting that Meta’s leadership is now unusually explicit about the direction of travel - personal superintelligence as a daily companion, with glasses as the kind of device that can actually keep up because they see what you see, hear what you hear, and can stay with you all day. That’s not a roadmap - it’s a statement of intent.

The rest of this Briefing looks at the mechanism.

Seeing the shape, not just a product feed

There’s a particular kind of mistake we make when we look at the AI era through the lens of “what shipped this week”. We end up treating the world like a product feed - model releases over here, partnership deals over there, a new device announcement on the side. Lots of motion, not much shape. But shape is the real story.

The interface is thickening, and Meta’s strategy is to tighten the loop inside it.

For a company like Meta, the strategic unit isn’t a model, a feature, or even just one product. It’s an enclosure mechanism - a way of tightening the distance between what you intend and what you experience, until that distance starts to feel like it was never there.

That’s the Cocoon move.

Not in the sci-fi sense. Not “when we all live in headsets”. But in the much more tangible (and therefore much more important) sense - an AI-Mediated layer that becomes the default interface through which reality is perceived, interpreted, and acted upon.

If you want one crisp definition to carry through the rest of this post:

Loop tightening = fewer seconds + fewer steps between intent and consequence.

Once you hold that, most of Meta’s initiatives stop looking like unrelated projects and start looking like a single strategy.
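One way to make that definition concrete is to count it. The sketch below is illustrative only: the `Step` type, the two example workflows, and every timing in them are assumptions made up for this example, not measurements of any Meta product. It simply treats a loop as a list of steps and calls one loop “tighter” than another when it needs no more steps and no more seconds, and strictly fewer of at least one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    seconds: float  # hypothetical guess, not a measurement


def loop_cost(steps):
    """Return (number of steps, total seconds) between intent and consequence."""
    return len(steps), sum(step.seconds for step in steps)


def is_tighter(a, b):
    """True when loop `a` has no more steps and no more seconds than `b`,
    and is strictly better on at least one of the two."""
    steps_a, secs_a = loop_cost(a)
    steps_b, secs_b = loop_cost(b)
    no_worse = steps_a <= steps_b and secs_a <= secs_b
    return no_worse and (steps_a, secs_a) != (steps_b, secs_b)


# Hypothetical workflows with made-up timings, for illustration only.
laptop_workflow = [
    Step("open laptop", 5.0),
    Step("find a tab", 3.0),
    Step("log in", 8.0),
    Step("summon tool", 2.0),
    Step("type query", 10.0),
    Step("wait", 4.0),
]
voice_request = [
    Step("speak request", 3.0),
    Step("wait", 2.0),
]

print(loop_cost(laptop_workflow))
print(loop_cost(voice_request))
print(is_tighter(voice_request, laptop_workflow))
```

The useful part is not the numbers but the comparison: any initiative that removes a step or shaves seconds off one moves a loop in the same direction.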

Immersion is NOT just spatial

People hear “immersive” and picture VR.

But the TrustIndex definition of immersion has never been about physical space. It’s about AI-Mediation.

If your view of the world arrives through a feed that’s filtered by a social graph, ranked by an algorithm, and increasingly synthesised by AI, then you are already living inside a thick interface layer. You’re already immersed - but most people just don’t think of it that way.

That’s why this next phase is so easy to miss. It doesn’t arrive as a singular event. It arrives as continuity. We move from a place you visit to a layer you inhabit.

And once you see that, you can see more clearly what Meta is doing - not chasing intelligence as a trophy, but building an architecture that makes the AI-Mediated layer feel coherent, useful, and natural enough that you stop noticing it.

The loop that Meta is tightening

Here’s the loop in its simplest form.

You imagine something. You express it. The system generates an output. You edit. You iterate. You share, deploy, buy, publish, message, decide. The world responds. The loop tightens.

If that sounds abstract, it’s worth noticing how often Meta now ships features that focus on making “imagine → generate” feel less like using a tool and more like thinking out loud.

When Meta launched its Meta AI assistant (built on Llama 3), it didn’t only ship another chat interface. It shipped an “Imagine” mode designed to generate images as you type, updating continuously with every few characters - a deliberate reduction in seconds and steps between intention and output.

That’s not a party trick. It’s a clue.

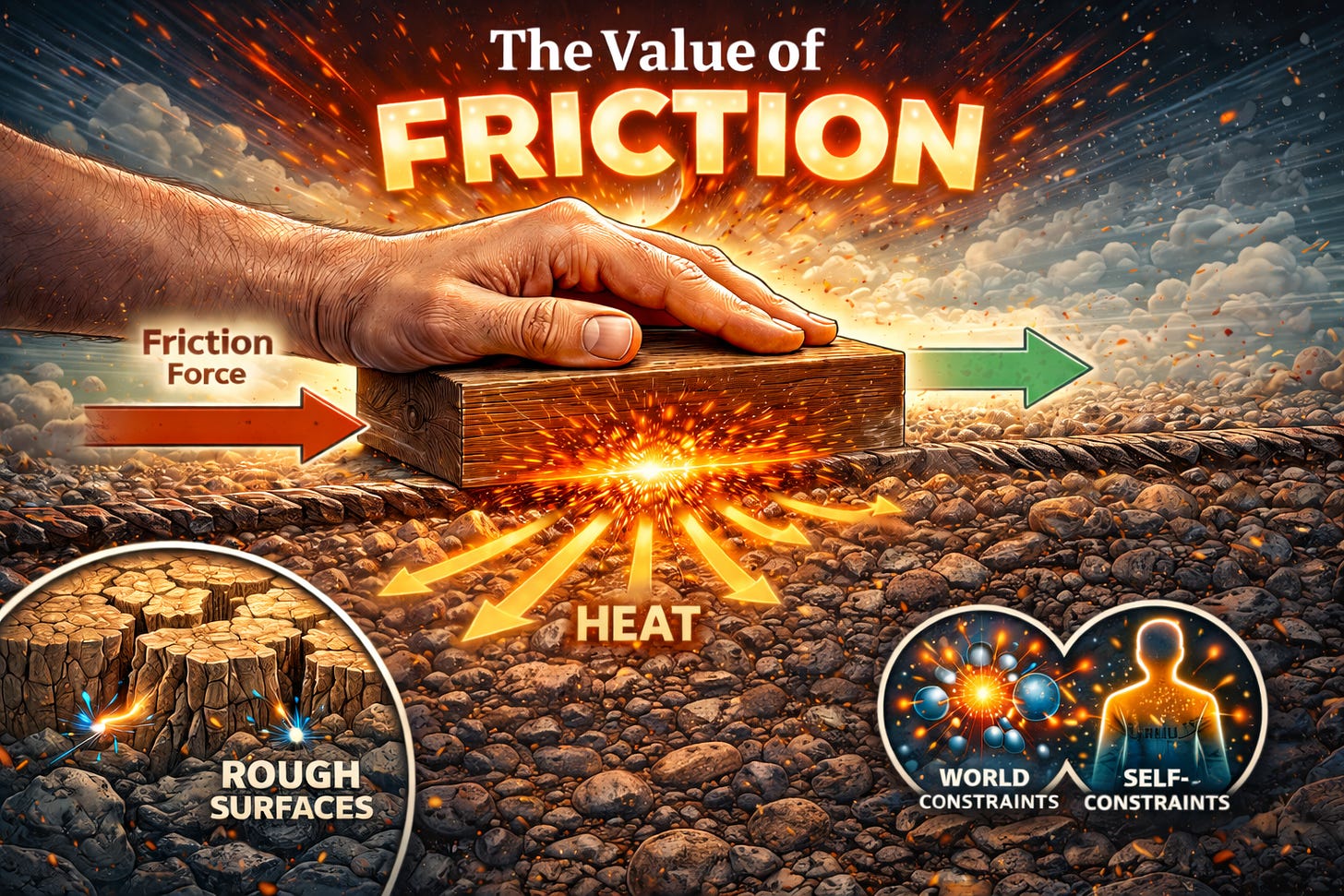

The strategic question becomes - how many seconds sit between intention and experience? How many steps? How much friction?

Because friction is the governor.

Not friction inside the system. Friction between you and what you’re trying to do. Between you and what you’re seeing. Between you and what you’re about to become.

Lower that friction, and a set of pressures rises automatically.

That’s where the TrustIndex lens helps. It provides a map of where pressure accumulates when AI-Mediation becomes the default interface.

The loop becomes a flywheel

As the loop tightens, it starts to compound.

A smoother loop makes the AI-Mediated layer more attractive. More adoption gives the system more behavioural data and more social context. That context improves personalisation. Better personalisation makes the layer feel more coherent. Coherence reduces perceived friction. Reduced friction tightens the loop further.

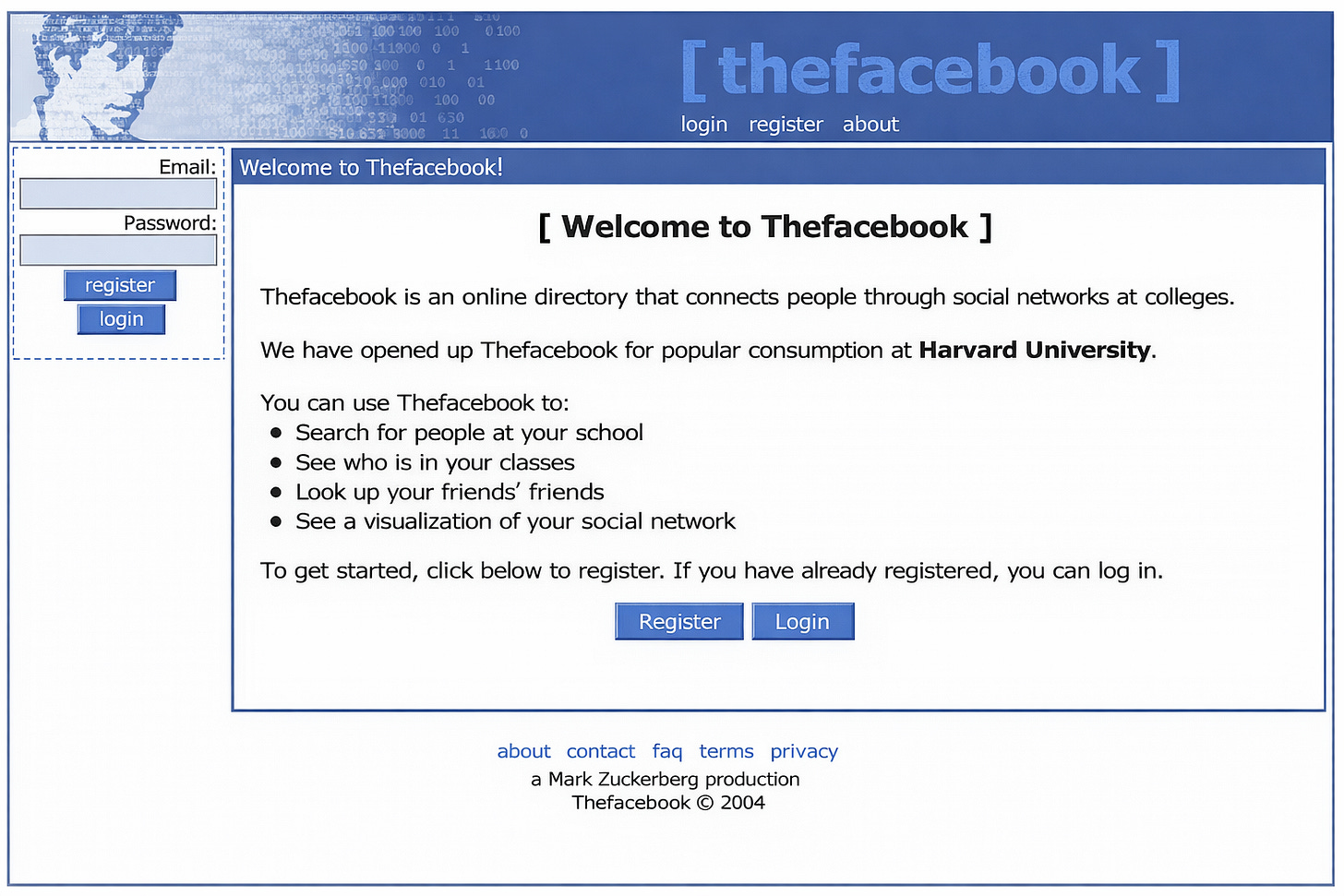
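The compounding described above can be sketched as a toy simulation. This is a minimal sketch under stated assumptions, not a model of Meta’s actual dynamics: the function name, the starting values, and the `gain` parameter are all hypothetical, chosen only to show how two quantities that feed each other drift in one direction round after round.

```python
def simulate_flywheel(friction=0.8, adoption=0.1, rounds=5, gain=0.3):
    """Toy feedback loop with hypothetical parameters.

    friction and adoption are both fractions between 0 and 1.
    Returns a list of (adoption, friction) pairs, one per round.
    """
    history = []
    for _ in range(rounds):
        # Lower friction makes the layer more attractive, so adoption
        # closes part of its remaining gap to full adoption (1.0).
        adoption += gain * (1.0 - friction) * (1.0 - adoption)
        # More adoption stands in for more context and better
        # personalisation, which reduces perceived friction.
        friction *= 1.0 - gain * adoption
        history.append((round(adoption, 3), round(friction, 3)))
    return history


for adoption, friction in simulate_flywheel():
    print(f"adoption={adoption:.3f}  friction={friction:.3f}")
```

In this sketch adoption rises and friction falls in every round, and each change makes the next one larger or easier. That is all “flywheel” means here.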

That flywheel isn’t theoretical. We’ve already seen an earlier version of it in the social media era. The feed was a “primitive Cocoon” - not immersive in the spatial sense, but immersive in the cognitive sense. It shaped attention, identity, and politics by becoming the default interface for a huge fraction of social reality.

Now take that same dynamic and move it closer to perception, closer to language, closer to intent - and closer to action.

That’s what “AI-Mediation” actually means.

Five frictions Meta is working to remove

If you take the loop seriously, you can predict the kinds of investments a serious player will make - not because you’ve memorised their roadmap, but because you understand what the loop demands.

One useful sanity check - look at the consumer surfaces Meta is choosing to ship.

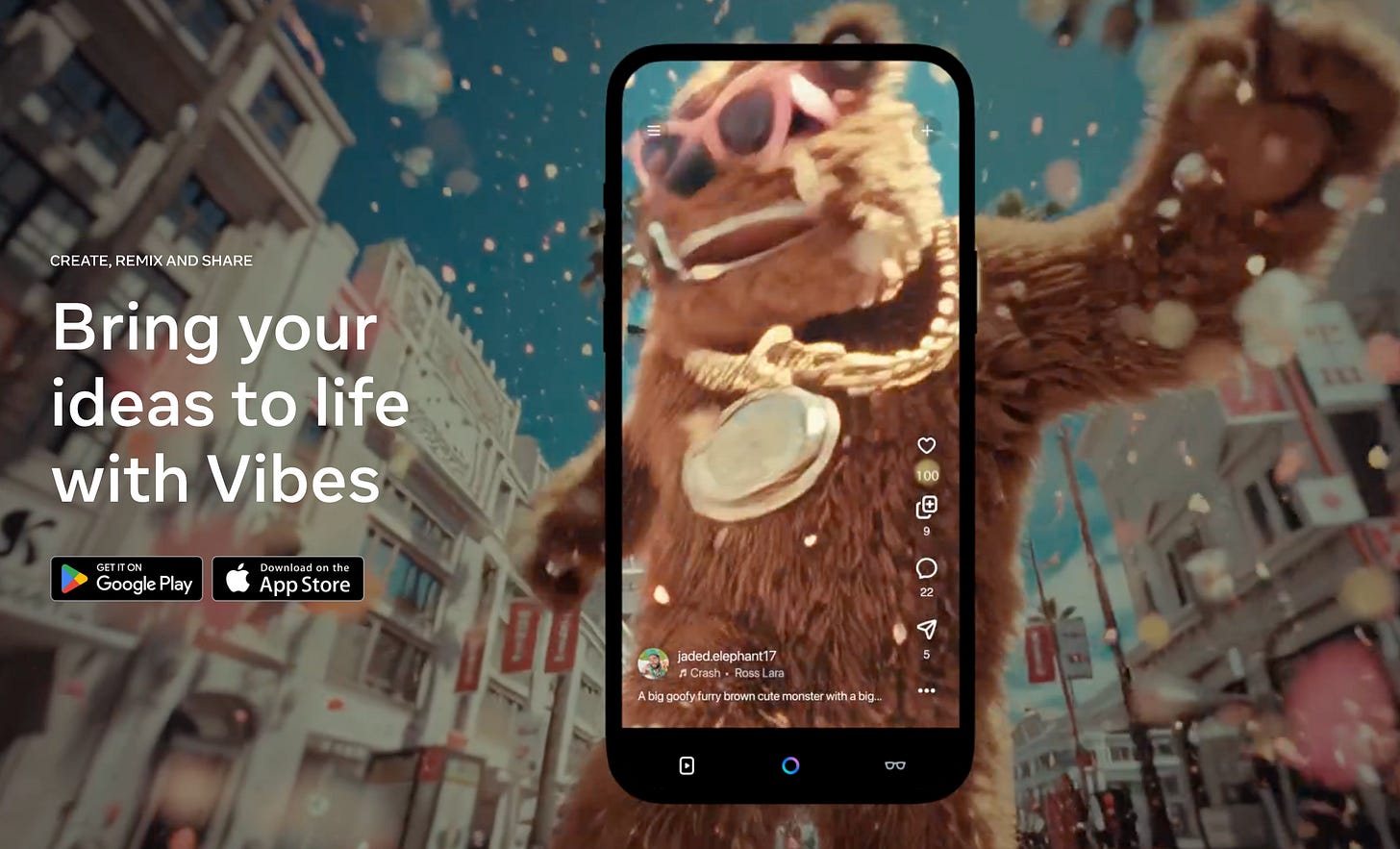

The Meta AI app isn’t just “another assistant”. It’s becoming a control plane - the place you manage your assistant, your creations, and increasingly your glasses.

And Vibes (Meta’s short-form AI video feed) is not just a gallery of demos. It’s a behavioural loop: watch → get inspired → remix → share → watch again. A creation engine disguised as a feed. That’s how the Cocoon arrives - continuity, not ceremony.

1) Creation friction

To tighten the loop, you can’t just generate outputs. You have to generate workable material.

A single image is a dead end. A one-shot prompt is fragile. A clever demo is not a loop.

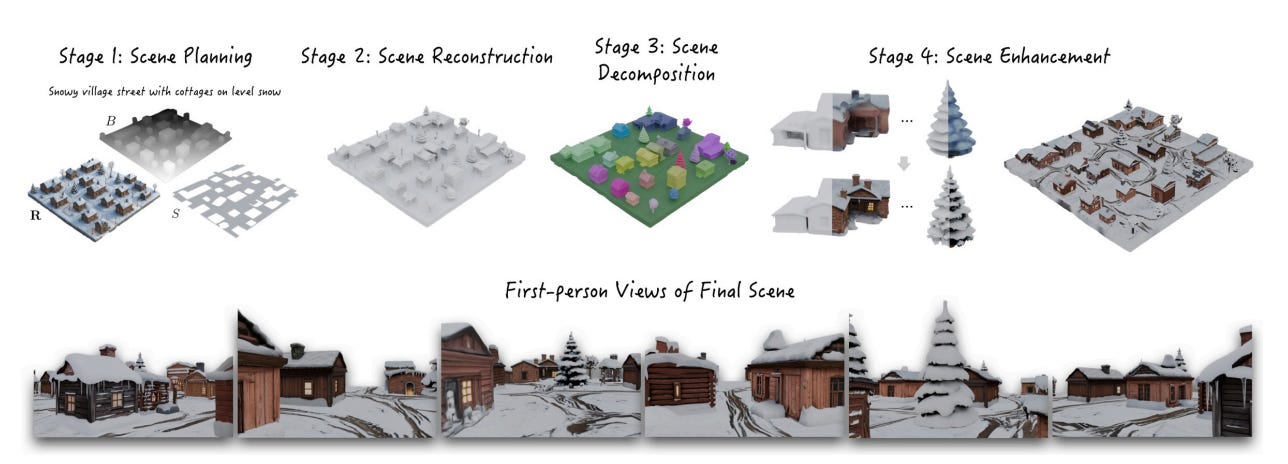

A loop requires a substrate that can be edited, recomposed, navigated, and iterated on without collapsing into chaos. It requires worlds that have structure. Objects that can be decomposed. Environments that can be modified without the whole scene tearing.

This is why Meta’s generative work is drifting toward building a place, not just rendering a view.

Their WorldGen research is revealing because it encodes the loop as a pipeline rather than a prompt. Planning and blockout. Navigable layout. Reconstruction that yields real geometry compatible with existing engines and tools. Decomposition so objects don’t fuse into one irreducible blob. Refinement so the environment can survive scrutiny without blowing out performance.

That sequence matters because it tells you what Meta is optimising for - not the wow moment, but the capacity to keep the world stable under change.

Alongside that you see the “physics” thread - V-JEPA 2, trained on video, designed to predict how a scene evolves over time at the level of concepts rather than pixels.

The point isn’t cinematic output. It’s object permanence, causal continuity, and the ability to plan. A Cocoon that can’t keep track of what still exists when it goes out of view isn’t an environment - it’s a fragile illusion.

The perception substrate (the part people miss)

There’s another layer underneath “world generation” - the models that turn reality into something that can be handled.

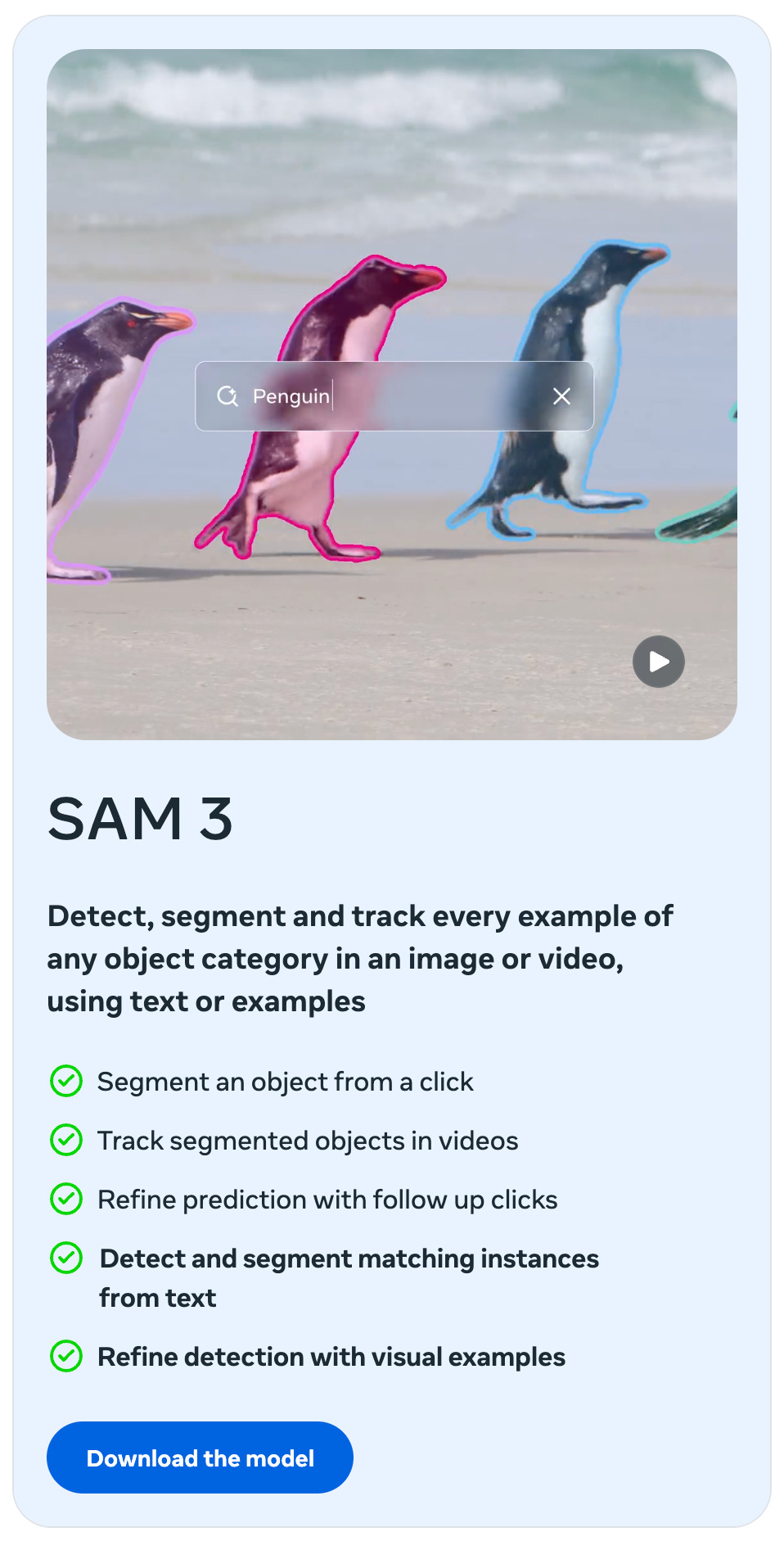

Segment Anything (and its newer variants) is a good example. If you can reliably pick out “the thing” from “the scene” (in images, in 3D, and even in audio), then the world becomes editable. Not just generate-able.

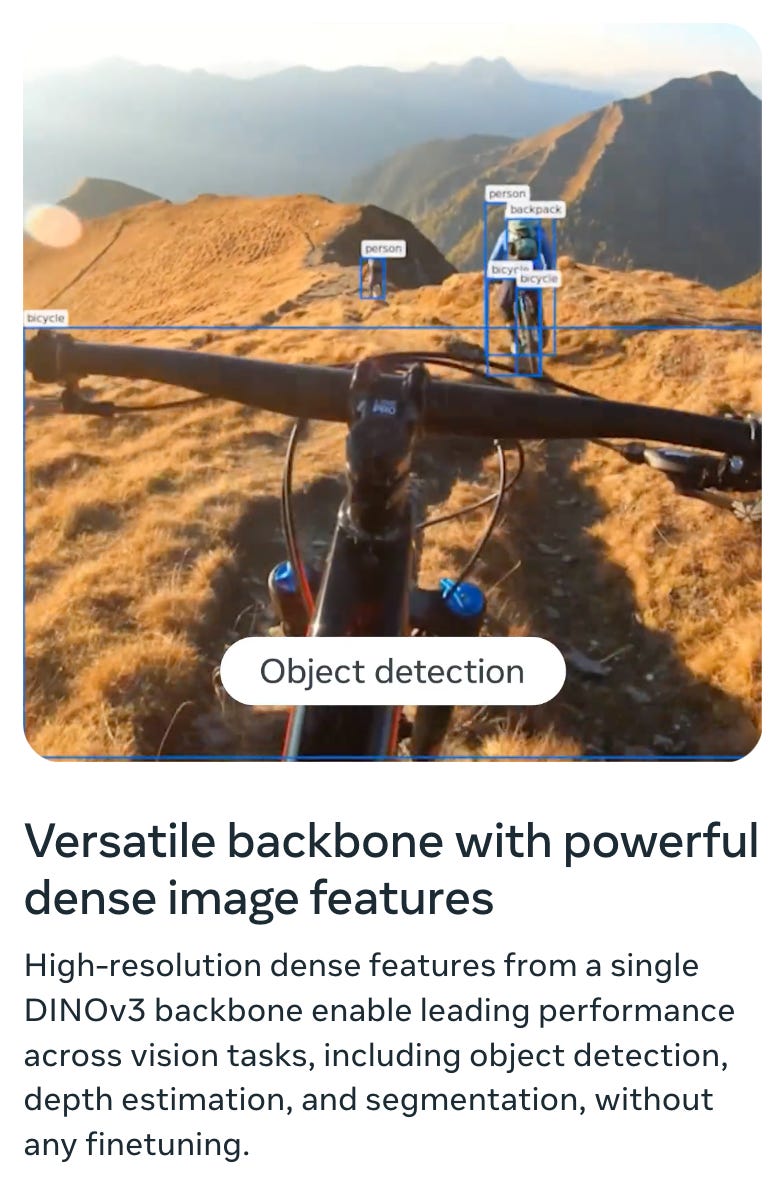

DINOv3 belongs in the same family. It’s not a flashy demo model. It’s a representation backbone. The kind of thing you build on when you want stable features, stable objects, stable handles.

These aren’t side quests. They’re the primitives that make an AI-Mediated layer feel like an environment rather than a slideshow.

TrustIndex pressure: as creation friction drops, Fidelity pressure rises. Generated output stops reading as “content” and starts reading as part of your lived environment.

2) Comprehension friction

Once you can generate, you need to mediate.

This is the move most people still underestimate - translation, summarisation, conversational grounding, and context-sensitive assistance. Not as discrete tools, but as a continuous membrane between you and what’s happening.

Meta has been unusually explicit here too. SeamlessM4T was built as a unified multimodal translation system (speech-to-speech, speech-to-text, text-to-speech, text-to-text across close to a hundred languages) with an architecture designed to reduce latency and error compounding.

The reason is structural. The Cocoon can’t become a social habitat if communication is brittle. But the more revealing work is where translation stops being lexical and becomes behavioural.

SeamlessExpressive aims to preserve prosody and voice style across languages (whisper stays whisper, intensity stays intensity) because humans don’t bond to correct subtitles, they bond to tone and timing.

Then the Seamless Interaction Dataset extends the idea further - thousands of hours of dyadic, face-to-face interactions, with prompts designed using the interpersonal circumplex framework, and annotations for turn-taking and non-verbal dynamics.

It’s training for what you might call social physics - the micro-patterns that make conversation feel natural rather than merely technically accurate.

Even the “safety” dimension becomes part of the mediation layer. Meta has described strategies to reduce hallucinated toxicity in translation - a kind of real-time sanitisation of what passes through the membrane.

That’s a lot of effort to spend on what most people still file under “nice-to-have translation”. But it makes perfect sense if you see the loop: as mediation becomes the default interface, either you keep the user socially competent inside it, or they’ll experience the layer as a handicap.

TrustIndex pressure: as comprehension friction collapses, Reality pressure rises (interpretation itself is increasingly outsourced) and Transparency pressure intensifies under attention constraints.

You can’t ship infinite explanation into finite attention.

Narrative observability becomes the compression layer - the shape your mind can actually hold.

That’s powerful. It can be a guardrail. And it can also be an exploit surface.

3) Access friction

If you want continuity, you need proximity. The loop can’t tighten if the interface lives behind a ritual.

Open laptop. Find a tab. Log in. Summon tool. Translate thought into query. Wait.

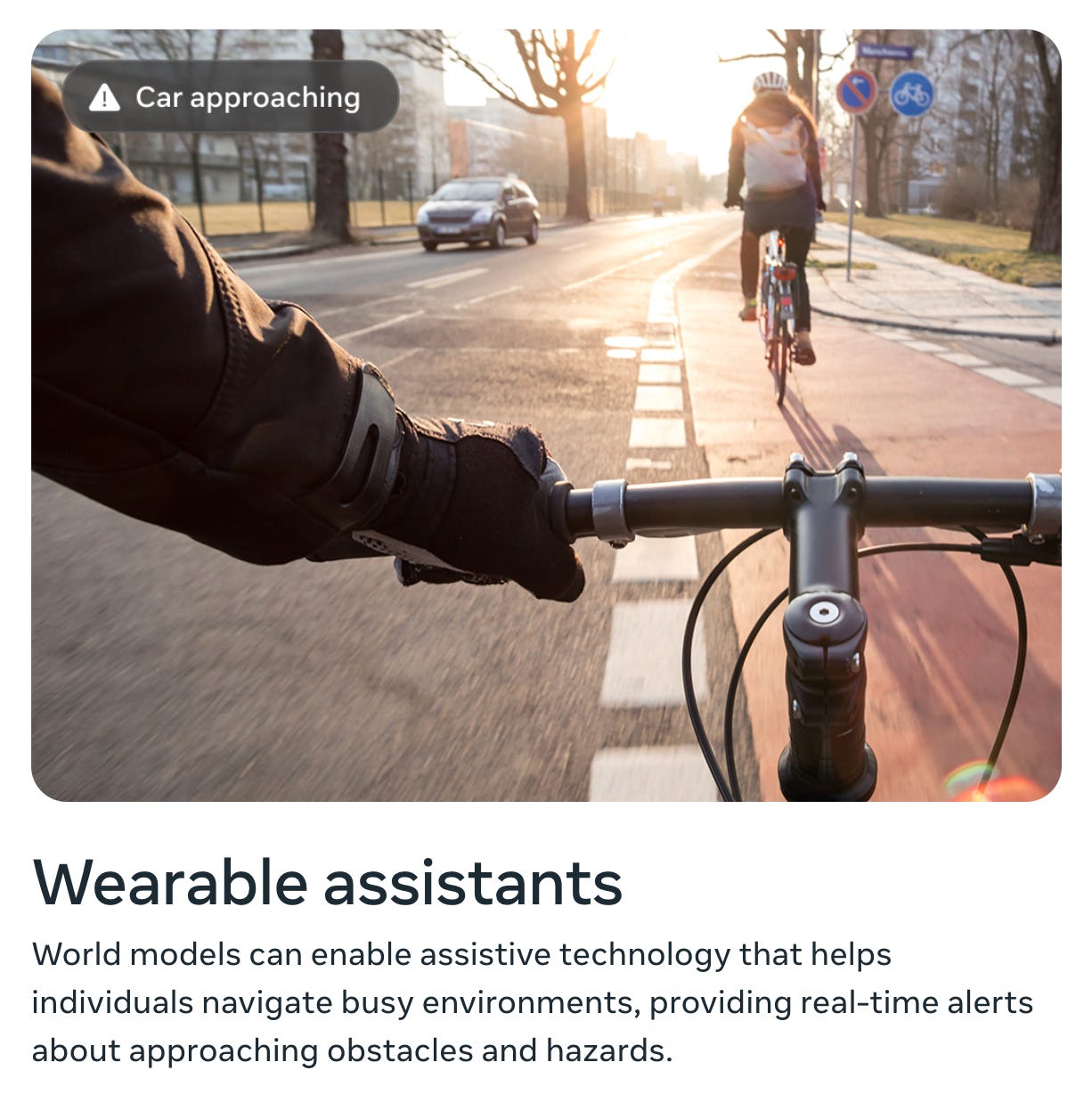

The loop tightens when the interface becomes closer than the ritual. This is why the “thin Cocoon” matters. Not because everyone wants to cosplay the future, but because glasses are a mainstream form factor. They can be worn without signalling. They can be present without demanding presence.

Ray-Ban Meta is revealing precisely because it’s ordinary. The product is recognisably consumer tech - camera capture, open-ear audio, calls, music, and a voice assistant. The important step is that you can invoke the layer without reaching for anything.

Then Meta pushes the category forward with Ray-Ban Meta Display - not a full headset, but an in-lens overlay. Glanceable cards. Teleprompter prompts. And the headline feature - live translation visually rendered in the lens. A subtitle layer for reality, literally.

And now we’re seeing category expansion - Oakley Meta Glasses are the same basic thesis expressed in a performance form factor. Not “one novelty product”, but a portfolio.

This is where the numbers matter, because they collapse that “someday in the future” reflex you might have had.

Meta reported ~3.54 billion daily active people across its Family of Apps for September 2025. That’s a global population already inside an AI-Mediated layer, every day.

On the hardware side, EssilorLuxottica has said more than two million pairs of Ray-Ban Meta glasses have been sold since the late-2023 launch, and has talked about scaling production toward 10 million units a year by the end of 2026 - with reporting suggesting discussions to go higher if demand holds.

And in January 2026, Meta paused international expansion of the Ray-Ban Meta Display rollout due to “unprecedented demand and limited supply”, prioritising the U.S. while waitlists stretch well into 2026.

That isn’t a niche experiment. That’s an interface distribution problem being solved.

TrustIndex pressure: as access friction drops, Reality pressure rises (the layer becomes default), and Portability comes under downward pressure as continuity becomes a switching cost.

4) Command friction

A loop isn’t only about what you see. It’s also about what you can do.

The fastest way to tighten the loop is to move upstream of language. Typing is slow. Menus are slow. Even speaking is slow in the places where speaking is socially awkward. So Meta has spent serious effort on “intent capture” - the Meta Neural Band, built around surface EMG, reading muscle activation at the point where intention becomes movement.

Micro-gestures become input. A thumb twitch becomes navigation. A subtle pinch becomes selection. In Meta’s own framing, it’s an invisible controller. And the more revealing part is the ecosystem move.

At CES 2026, Meta and Garmin demonstrated a proof-of-concept that lets drivers and passengers control an in-vehicle interface through the Neural Band.

That matters because it suggests Meta doesn’t only want an input device for its own walled garden. It wants a general-purpose input layer for ambient computing - a way for “intention → action” to become a portable interaction primitive.

This is where Autonomy becomes ambiguous in a very precise way. Greater delegation can feel like freedom - the system does more so you do less. But when command friction drops far enough, the system doesn’t just execute. It predicts. It pre-loads. It suggests. It shapes the space of available action.

The boundary between “helpful” and “steering” becomes a design decision.

And design decisions compound.

TrustIndex pressure: collapsing command friction raises Autonomy pressure - and makes the steering boundary the most consequential product decision in the stack.

5) Commerce friction

Meta is not building a Cocoon in a vacuum. It also has an economic engine. The more honestly we treat that engine, the clearer the strategy becomes.

Meta’s Q3 2025 results are a reminder of the scale - revenue of $51.24 billion for the quarter, with ad impressions and ad pricing both up year-on-year.

That isn’t just a profit story. It’s a feedback story. A system trained to optimise outcomes across billions of people daily is the perfect host environment for automated persuasion.

Advertising has always been a mediation business - connecting attention to outcomes. But in a Cocoon architecture, advertising doesn’t have to remain an interruption. It can become integrated at the level of context and intent.

Meta’s direction for 2026 is end-to-end ad automation with Advantage+ - the advertiser supplies a product and a budget, and the system generates creative variations, runs targeting and placement decisions, and optimises. Marketers shift from executor to auditor.

Once you combine that with the rest of the stack (a continuous lens capturing context, a mediation layer shaping interpretation, and an input layer closer to intention) you get something new.

In a page-based web, ads sit alongside content.

In a place-based interface, the question becomes - what properties of the environment are being shaped by commercial incentives? What gets surfaced as relevant? What feels natural? What becomes the default option? Not because you chose it, but because it fits the architecture.

No moralising required. This is just the economic physics of a system that earns money by making mediation effective.

TrustIndex pressure: as Fidelity and Reality intensify, they become monetisable - putting Transparency and Equality under pressure.

Why open weights makes sense here

Meta’s decision to release powerful models as open weight isn’t charity, and it isn’t ideological purity. It’s stack strategy. If you don’t make money selling intelligence, you can afford to commoditise it.

Open weights standardises the substrate. It invites the global developer community to build optimisations, tooling, and applications on top of Meta’s architecture. It expands the ecosystem of worlds, agents, and integrations without Meta having to create every unit of content itself. And it prevents a competitor from owning the foundational layer in a way that would force everyone else to pay rent.

But notice where this lands - not just in developer tooling, but in social surfaces.

Meta AI Studio is the clearest example. It’s not “a platform feature”. It’s a mechanism for filling the Cocoon with agents (characters, helpers, and AI-extensions of real people) without Meta needing to author them one by one.

In that world, the moat migrates to what Meta already has - distribution, the social graph, and the interface layer. The parts of the loop that sit closest to human life.

In other words - give away the engine so you can own the roads.

The continuity from the social media era

It’s tempting to talk about this as “new”. But the sharper view is continuity.

Meta has already run an experiment at planetary scale with a feed-shaped interface.

That experiment produced enormous social and economic value. It also produced real harms. Most importantly, it demonstrated something structural - if you control the interface through which people perceive each other, you can shape the emergent reality of a society.

The Cocoon era doesn’t change that principle. It extends it. It moves the interface closer to perception, closer to language, closer to intent, and closer to action.

Which means the second-order effects (positive and negative) will definitely not be smaller. They will be upstream.

There’s also a subtle psychological continuity. People don’t generally adopt a new interface because it’s “more advanced”. They adopt it because it reduces effort while increasing coherence. It makes the world feel easier to navigate.

It’s convenient.

That’s why the TrustIndex perspective matters here. In an environment saturated with synthetic media, trust stops being a moral stance and becomes an operational requirement. The system that can keep AI-Mediation coherent (not just impressive) earns the right to sit in the middle.

Seeing the whole strategy at once

When you look at Meta one initiative at a time, everything can seem reasonable.

A translation model here. A generative assistant there. Better ad automation. A wearable that sells surprisingly well. A new glasses line for a different audience. A remix feed that quietly trains creation behaviour. Some wrist-interface work. “Segment Anything” primitives that make reality editable. A research pipeline trying to drag input upstream.

Each interesting. Each impressive.

But when you step back, you can see the whole architecture. This is not a company optimising for “best model” as a trophy. It’s a company tightening a loop.

WorldGen and world models make generation behave like an environment rather than a file.

Seamless turns language into a membrane - and then pushes beyond language into rapport.

Ray-Ban Meta (and Oakley Meta) turn that membrane into something closer to a continuous lens.

SAM variants and vision backbones make the world decomposable - so mediation can grab hold of it.

The Neural Band shifts input toward intention.

AI Studio populates the layer with agents.

And ad automation turns the entire thing into a commercial engine that can operate at the level of context.

Each element is useful on its own. But together, they compound.

A tighter loop increases adoption. Adoption increases data and context. Context improves AI-Mediation. Improved AI-Mediation reduces perceived friction. Lower friction tightens the loop.

Rinse and repeat.

This is why the strategy is easy to miss. It arrives as incremental improvements to things you already use. And it’s packaged as convenience.

A note on failure modes

A Cocoon architecture has a particular kind of brittleness - the better it works, the more it becomes relied upon. When AI-Mediation sits between you and reality by default, certain failures become existential.

If generation hallucinates in ways that matter (not as a funny bug, but as a causal error), coherence collapses.

If the hardware supply chain can’t meet demand, the continuity story breaks.

If privacy and surveillance concerns trigger backlash or regulation, it forces the interface layer back into “optional tool” territory.

None of this is a verdict. It’s simply the nature of operating at the layer where people experience the world.

The TrustIndex lens

When an AI-Mediated layer becomes the default interface, the system comes under pressure to increase Autonomy (delegation), intensify Reality (AI-Mediation), raise Fidelity (everyday indistinguishability), manage Transparency (inspectability under attention constraints), push Portability downward (switching costs), and shape Equality (who benefits, who is mediated well, who is left behind).

What’s striking about Meta, viewed through this lens, is not any single breakthrough.

It’s that a major player has already made such meaningful progress on all fronts.

While most of the industry conversation is still staring at benchmark charts, the interface is thickening as the loop is tightening.

And it’s happening right here, in front of you. Now.

The TrustIndex isn’t just a measurement - it provides a map of how the pressures are changing. And if everything is changing this fast, what can you rely on?

Join in as we track this slope and stay up to date. You can always find the current dashboard at TrustIndex.today and subscribe for regular updates as new Signals arrive, weekly Briefings are published, and new Reports are released.