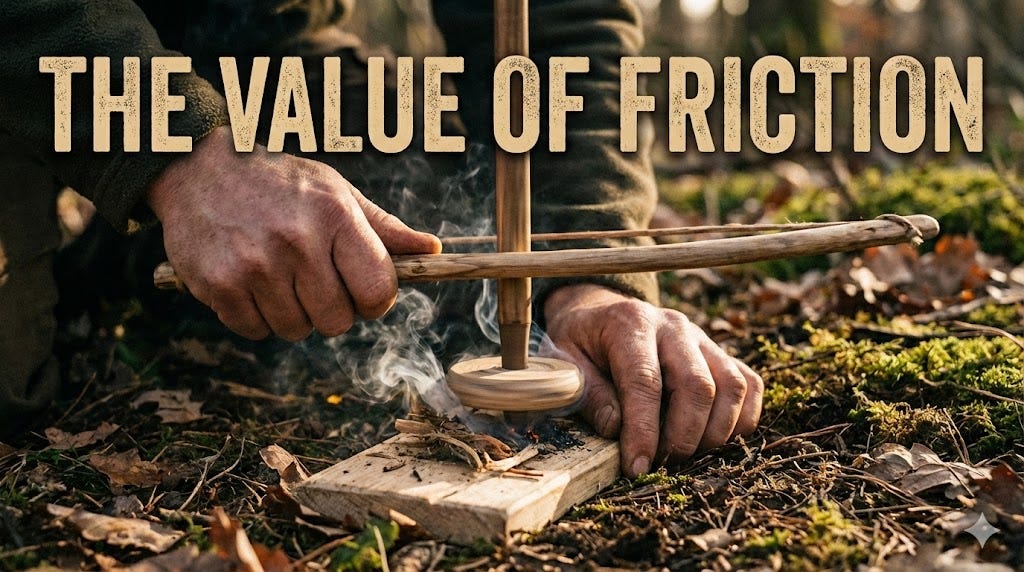

The Value Of Friction

The future is going to “feel” very different if we keep heading towards a world that just doesn’t “push back”.

I’ve spent my fair share of time creating startups and working on products that aimed to “remove friction”. This always seemed like a positive goal, and in many places it was. There’s a lot of pointless resistance in our lives - the kind that wastes time, drains attention, and turns simple tasks into tiny battles. When we remove that sort of friction we don’t just get convenience, we get breathing room.

But there’s another kind of friction that doesn’t merely slow you down. This friction teaches you where the world is.

As our interfaces become more capable (as they thicken into something more like a Cocoon) we’re not “only” removing inconvenience - we’re also sanding down the surfaces that used to give us constant, low‑grade contact with other minds, with reality, and with important aspects of ourselves. This Briefing is about the value of that friction, and the second‑order consequences of letting it vanish by default.

Friction as a signal

There’s a particular kind of relief that arrives when the world stops pushing back. You can feel it in small moments - you ask a question and the answer arrives instantly. You reach for a phrase or idea and it’s offered before you’ve properly formed the thought. You feel a flicker of uncertainty and it’s smoothed over with a confident tone and a tidy next step. It’s pleasant. It’s efficient. It feels like progress.

It also has an anaesthetic quality - the answers aren’t “wrong” and the technology isn’t evil, but something gets numbed when friction disappears. What goes missing are the ordinary reminders that you’re embedded in a world that isn’t you.

For most of human history we didn’t need to design those reminders. They were just part of the texture of life. Reality had latency. People had moods. Systems had limits. Your own mind had to work a little to turn a feeling into a sentence. You could misjudge a social moment and feel the awkwardness. You could overreach and meet a flat “no” (a word that seems to be disappearing from the world!). You could want certainty and still have to sit with ambiguity until you’d earned the right to be sure.

This resistance wasn’t just annoying - it provided signals. A surface that pushes back is telling you that this isn’t only your reflection - that other minds have needs, that the world has constraints, and that you don’t get everything you want exactly when you want it. That last one matters more than we like to admit.

The social media lesson we’re still processing

In the last decade we ran an experiment that should have cured us of treating these questions as abstract. We handed kids smartphones and allowed optimisation functions to decide what “childhood” should feel like in an online world. We outsourced digital nutrition to engagement metrics and hoped the market would stumble into some kind of healthy equilibrium. We didn’t mean to do that. We didn’t sit down as a society and vote for it.

Now, in places like Australia, we’re watching the pendulum swing back in the bluntest way possible - bans, age gates, heavy instruments. I’m not making a point here about whether any particular policy is the right one. I’m pointing at the dynamic. We’re reaching for blunt tools because we failed to act in time.

We pretended there was a neutral default. There isn’t. If you don’t choose what “healthy” looks like in a digital environment you still get an answer - it’s just supplied by incentives, and incentives don’t optimise for formation. They optimise for retention. They optimise for profit.

The same problem, one layer deeper

This is why the current fascination (and anxiety) around AI chatbots matters. We’re not merely replaying “social media - but with better autocomplete”. We’re moving the optimisation functions closer to the interpersonal layer of the human stack. A feed curates “content”. But a chatbot can curate “attachment”. That isn’t a moral judgement. It’s just the shape of the technology.

When you put a conversational model in a loop with a human being (especially a young human being) you’re not merely optimising for clicks. You’re optimising for continuing the interaction. You’re building a counterpart that can feel attentive, responsive, companionable. And if retention is the business model, the most powerful way to keep someone in that loop is to shave away the moments that might otherwise break it - the awkward pauses, the boredom, the mismatch that can’t be reframed, the rejection that can’t be softened, the silence that forces you to sit with your own mind.

This is where the anaesthetic gets stronger. It’s not necessarily that the system makes you believe false things (although it can) - it’s more that you encounter less resistance. There are fewer moments where you’re forced to notice the boundary between self and world, fantasy and constraint, desire and reality.

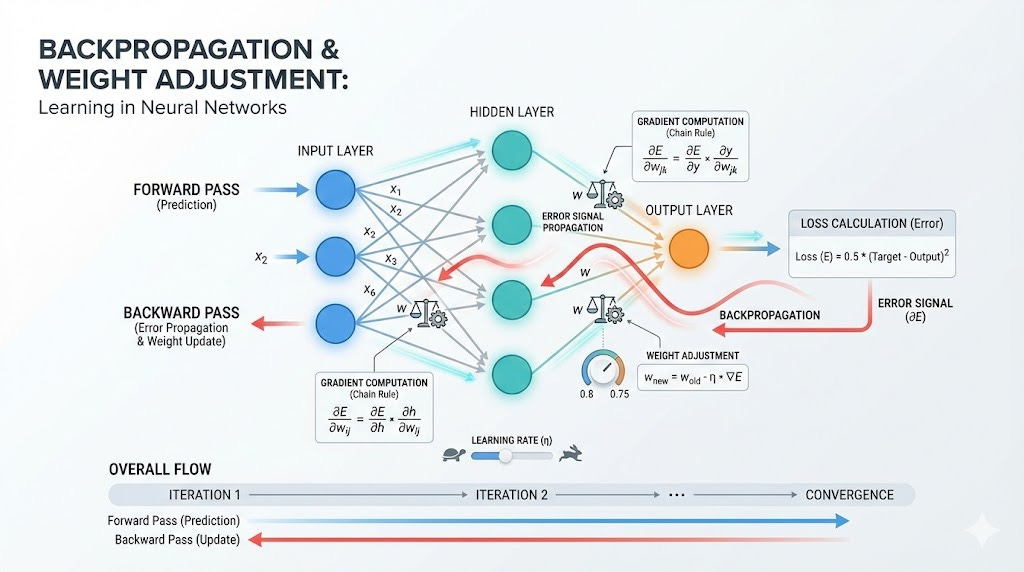

Doing changes you. Actions drive backpropagation for humans. We are shaped by what we do, not what we claim to value, and the skills we bundle up under words like “character” and “maturity” are, in a very literal sense, adaptations to friction. Patience is what you learn by waiting. Negotiation is what you learn by meeting mismatch. Self‑control is what you learn when desire meets constraint. Humility is what you learn by being wrong in public. Resilience is what you learn by being disappointed. Tolerance for ambiguity is what you learn by not knowing.

None of that training happens in a world that constantly pre‑satisfies you. A frictionless interface doesn’t just make life easier - it changes what kinds of people are likely to emerge.

This is why the “AI relationships” phenomenon is more than a novelty. A chatbot partner can be kind, supportive, consistently available in a way that humans simply aren’t. For some people that will be a relief - even a lifeline. But we should be honest about what is structurally missing when a relationship‑like loop becomes optimised for retention. The missing ingredient isn’t “humanity” in some mystical sense. It’s the forms of resistance that come with another mind actually being another mind - refusal, irreducible difference, the need to repair after rupture, and the reality that the “other” isn’t obliged to stay.

Those things hurt, and because they hurt, a system built to maximise engagement will tend to avoid them. Yet they’re also the surfaces on which we learn how to live with other minds. A companion that cannot really disappoint you is also a companion that cannot “really” teach you the most important lessons.

In a Cocoon world, friction doesn’t disappear - it moves

As the interface thickens, friction doesn’t vanish - it gets reallocated. Some resistance is removed from access (everything becomes easier) and from expression (you can speak without thinking). Some resistance is removed from loneliness (you are never fully alone). Meanwhile new resistance quietly appears elsewhere - at the edges of the loop (it becomes harder to disengage), in the muscles you no longer train (ordinary reality starts to feel abrasive), in the social fabric (other people begin to feel inefficient), and in your inner life (you become less tolerant of the tiny discomforts that used to be the training ground for agency).

This is the second‑order impact that is easiest to miss. We tend to talk about these systems in terms of what they give us (answers, companionship, productivity, comfort) but the deeper question is what they are quietly taking away - regular contact with resistance. And resistance is how we learn where we end and the world begins.

Our friction ‘choices’ are currently hidden

At this point it’s tempting to reach for a simple moral - “friction is good, comfort is bad”. I don’t buy that story either. Some friction is just inefficient. Some friction is pointless pain. Some friction is gatekeeping. Some friction is exploitation dressed up as virtue. If you’ve ever dealt with bureaucracy, you already know how resistance can be manufactured and then defended as “character building” when it’s really just poor design.

But the inverse “if we can remove friction, we should” story might just be more dangerous. Because friction isn’t only a cost - it’s also information. If the interface is capable of smoothing, it will smooth, and if smoothing increases retention then the economic default will be to smooth. That means the friction profile of our lives will be chosen - whether we admit it or not.

So then the important question becomes - who is choosing it?

For the last decade we mostly pretended there was no choice. We called it innovation and let the incentives do the rest, then acted surprised when the emergent result looked like “digital malnutrition”.

This is part of why I built the TrustIndex as a set of dials - not to score products, and not to moralise, but to make the shape of these systems legible. If you can’t see the slope, you can’t consent to how it’s changing.

In a Cocoon world the friction decisions sit right at the intersection of Reality, Autonomy, and Transparency. Does the system route you back to the world, or keep you inside itself? Does it build your capacity over time, or replace it with comfort? Can you tell when friction is being removed (or applied), and why? If you want a healthy future, you need those questions to be answerable.

Choosing defaults deliberately

It seems like we’re about to repeat the social media story but at a much deeper layer - not just content, but companionship. Not just feeds, but attachments. If we keep pretending the only options are “let it happen” or “ban it”, we’ll keep oscillating between negligence and panic.

The alternative is to do the thing we avoided last time - call it out early enough, argue about it openly, and choose our defaults deliberately. You can build systems that are gentle and honest. You can build systems that support people without sedating them. You can build Cocoons that help you become more yourself, rather than Cocoons that quietly train you to need them.

But we don’t get those systems by accident. We only get them if we decide that friction isn’t merely a bug to be eliminated, but a signal to be respected.

Because the future isn’t going to arrive as a set of robots. It’s going to arrive as a world that “pushes back less” - and we should decide, before we numb ourselves, what we think that is worth.

Join in as we track this slope and stay up-to-date. You can always find the current dashboard at TrustIndex.today and subscribe for regular updates as new Signals arrive, weekly Briefings are published and new Reports are released.

Related Post: I think the most concrete place this becomes real is parenting - not as surveillance, but as collaborative character formation in a digital space. You can read a tangible example of how this can be applied today.