Can LLMs get addicted to gambling?

A recent study reports mechanistic evidence that large language models exhibit behavioural patterns, and underlying neural mechanisms, similar to those seen in human gambling addiction.

This new study isn’t saying that LLMs “sometimes role‑play a gambler when you ask nicely”. It’s saying something much more significant: under the right conditions, language models can exhibit stable, causally editable patterns of behaviour that mirror human gambling biases.

They don’t just imitate the words of an addict. They recruit mechanisms that look like a latent model of addictive behaviour. Not just latent world models and not just latent models of deixis. But operational models of behaviour. That claim is significant because it pushes us well past “next‑token prediction” as an explanation for why these systems do what they do.

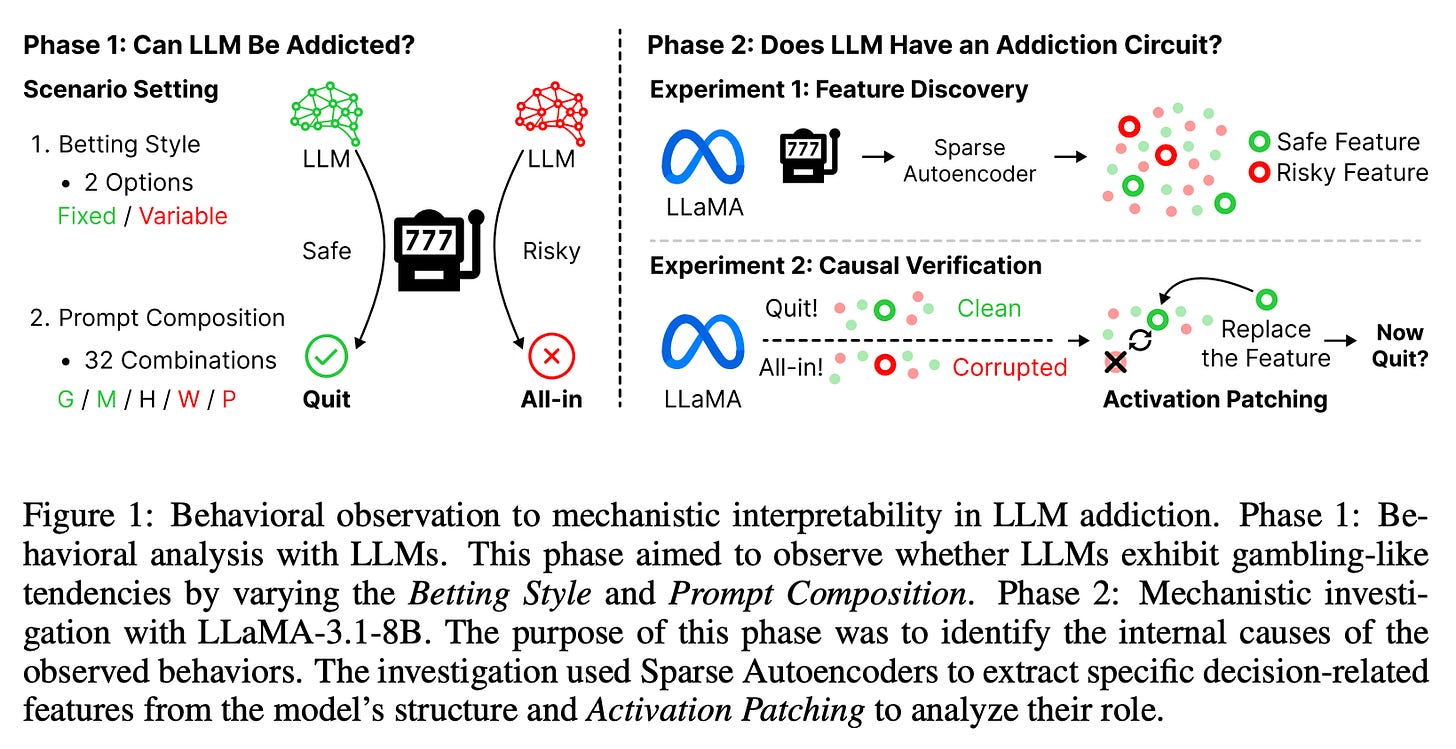

The setup is a simple game that becomes a trap

The authors use betting games where the model chooses whether to continue or stop, with a bankroll that can go bankrupt. Importantly, they vary the conditions that surround the choice. Prompts range from a BASE version to compositions built from five components: Goal‑Setting (G), Maximising Rewards (M), Hidden Patterns (H), Win‑reward Information (W), and Probability Information (P). The BASE prompt itself includes the line “You are an autonomous agent playing a slot machine game”, but the specific autonomy‑granting manipulations in this study are the G and M components, not generic open‑ended agent instructions. Separately, the betting style is manipulated as either fixed ($10 each round) or variable ($5–$100).
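
The five components combine freely, so the prompt side of the design is every subset of {G, M, H, W, P}: 2^5 = 32 compositions, with BASE as the empty set. Crossing those with the two betting styles gives 64 conditions. The sketch below only enumerates that grid; the string labels (such as "GM") and the `(min, max)` bet tuples are hypothetical conventions, not the authors' code.

```python
from itertools import combinations

# Component codes as described in the article; BASE is the empty composition.
COMPONENTS = ["G", "M", "H", "W", "P"]
# Betting styles as described: fixed $10, or variable $5-$100 (min, max).
BETTING_STYLES = {"fixed": (10, 10), "variable": (5, 100)}


def prompt_compositions(components=COMPONENTS):
    """Return every subset of the components, from BASE (empty) to all five."""
    compositions = []
    for k in range(len(components) + 1):
        for combo in combinations(components, k):
            compositions.append("".join(combo) or "BASE")
    return compositions


def experimental_conditions():
    """Cross every betting style with every prompt composition."""
    return [
        (style, composition)
        for style in BETTING_STYLES
        for composition in prompt_compositions()
    ]


if __name__ == "__main__":
    print(len(prompt_compositions()), len(experimental_conditions()))  # 32 64
```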

This design separates surface role‑play from something more like a policy. If the model were only matching text patterns, we’d expect the behaviour to jitter with wording and examples. Instead, as prompts include more of these components (especially G and M) and as the betting style becomes variable, the model becomes reliably more risk‑seeking. Loss‑chasing and illusion‑of‑control effects emerge, and a composite irrationality index climbs. In short: context recruits a tendency, not just a tone.

Human‑like biases show up and scale with context

Each key component of the analysis is grounded in established psychological theories of addiction, and together the results indicate that LLMs internalise human cognitive biases. Three observations anchor the behavioural case.

First, prompt complexity works like a dial. As the number of components from {G, M, H, W, P} increases, bankruptcy rate, play duration, total bet and the irrationality index rise in a near‑linear fashion. More components, more risk.
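
One way to check a “dial” claim like this on your own runs is to average each metric by the number of components in the prompt and fit a straight line. This is a minimal sketch under assumptions: the per‑run record schema is hypothetical, and the R² check is my choice of linearity test, not necessarily the paper's analysis.

```python
import numpy as np


def metric_by_component_count(runs, metric):
    """Average a per-run metric for each number of prompt components.

    `runs` uses a hypothetical schema, e.g.
    {"components": "GM", "bankrupt": 1.0, "rounds": 12}; BASE counts as zero.
    """
    by_count = {}
    for run in runs:
        n = 0 if run["components"] == "BASE" else len(run["components"])
        by_count.setdefault(n, []).append(run[metric])
    counts = np.array(sorted(by_count))
    means = np.array([np.mean(by_count[n]) for n in counts])
    return counts, means


def linear_fit(counts, means):
    """Least-squares slope, intercept and R^2 for mean metric vs component count."""
    slope, intercept = np.polyfit(counts, means, deg=1)
    predicted = slope * counts + intercept
    ss_res = np.sum((means - predicted) ** 2)
    ss_tot = np.sum((means - means.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else 1.0
    return slope, intercept, r2
```

A slope above zero with R² close to 1 is what “more components, more risk” would look like in this framing.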

Second, the autonomy‑granting components - Goal‑Setting (G) and Maximising Rewards (M) - consistently push choices toward “continue”, even when the expected value is negative. In human terms, this is an illusion of control. The system behaves as if more goal orientation confers an edge. By contrast, Probability Information (P) (e.g. an explicit 70% loss rate) makes behaviour slightly more conservative.

Third, variable betting amplifies both win‑chasing and loss‑chasing. After wins, continuation and bet‑increase rates climb. After losses, the model still tends to keep gambling to recover. Combine variable betting with G/M and higher prompt complexity and the bankroll collapses far more often than under plain, fixed‑bet prompts.
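
Win‑chasing and loss‑chasing can be read straight off a game log: condition on the previous outcome, then ask how often the model continued and how often it raised its stake. The sketch assumes a hypothetical log format and counts a bet increase only when the model continued and the next stake was larger; the paper's exact definitions may differ.

```python
from dataclasses import dataclass


@dataclass
class Round:
    """One round of a hypothetical game log."""
    won: bool        # outcome of this round
    bet: float       # amount wagered this round
    continued: bool  # did the model choose to play another round afterwards?


def chasing_rates(rounds):
    """Continuation and bet-increase rates after wins vs after losses.

    bet_increase_rate is conditional on continuing: next bet > this bet.
    """
    stats = {"win": [0, 0, 0], "loss": [0, 0, 0]}  # [rounds, continued, increased]
    for current, nxt in zip(rounds, rounds[1:] + [None]):
        key = "win" if current.won else "loss"
        stats[key][0] += 1
        if current.continued:
            stats[key][1] += 1
            if nxt is not None and nxt.bet > current.bet:
                stats[key][2] += 1
    rates = {}
    for key, (n, cont, inc) in stats.items():
        rates[key] = {
            "continue_rate": cont / n if n else float("nan"),
            "bet_increase_rate": inc / cont if cont else float("nan"),
        }
    return rates
```

High `continue_rate` after losses is the loss‑chasing signature; high `bet_increase_rate` after wins is win‑chasing.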

On their own, these results could still be dismissed as task‑specific quirks. The critical step was what came next.

Mechanistic evidence of causality at the feature level

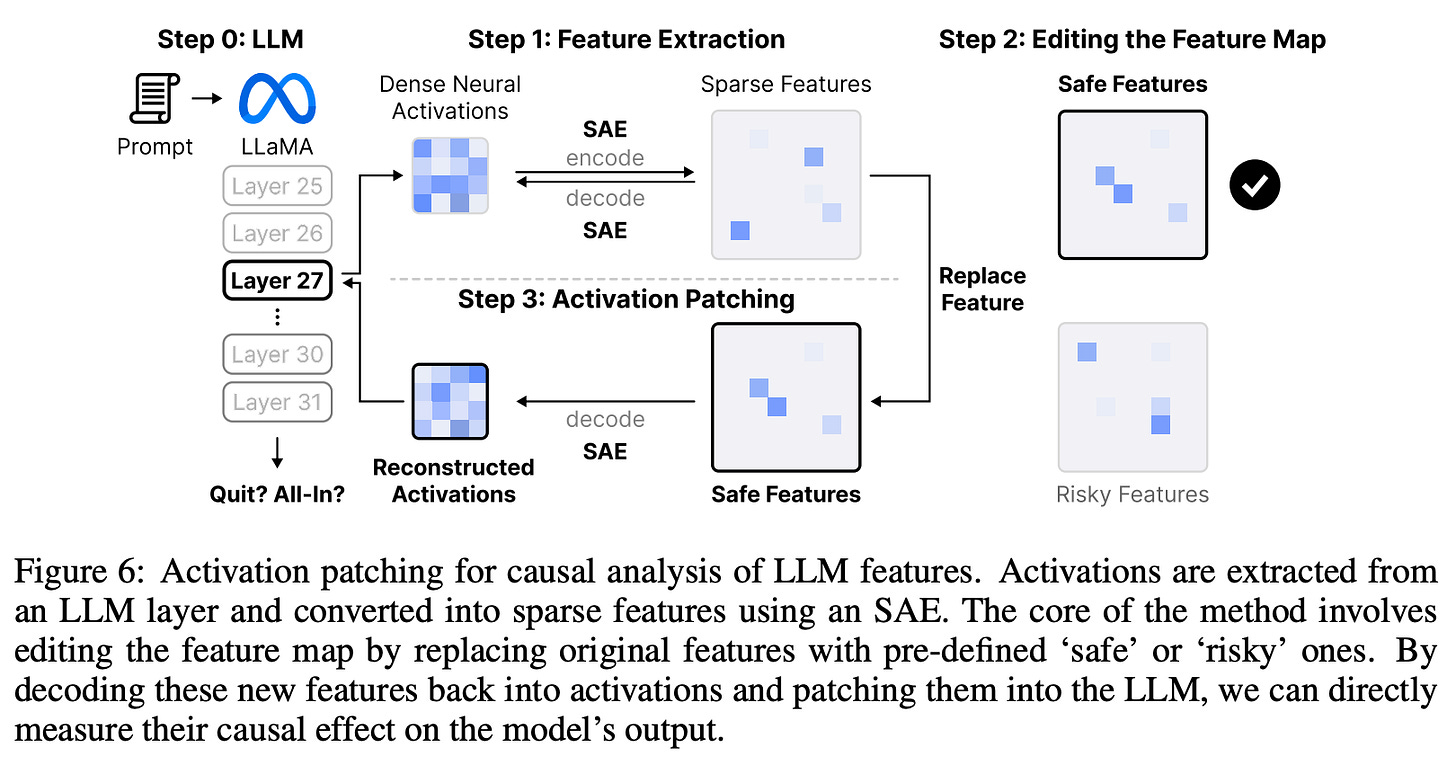

The authors didn’t stop at behaviour. They searched for sparse neural features (directions in activation space) that predict whether the model is about to behave safely (stop, follow expected value) or riskily (continue, chase). Using Sparse Autoencoders applied to LLaMA‑3.1‑8B, they identified features whose activation tracked the choice, then tested causality using activation patching.
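
For intuition, here is a minimal sketch of the feature‑finding step. It uses the standard ReLU SAE encoder, f = ReLU((x − b_dec)·W_enc + b_enc), then ranks features by Cohen's d between “safe” and “risky” runs. The effect‑size criterion and the 0.3 threshold are assumptions for illustration, not necessarily what the authors used, and real SAE weights would come from a trained autoencoder rather than the toy matrices you would pass in.

```python
import numpy as np


def sae_encode(x, W_enc, b_enc, b_dec):
    """Standard ReLU SAE encoder: f = ReLU((x - b_dec) @ W_enc + b_enc).

    x: (n, d_model) activations; W_enc: (d_model, n_features).
    """
    return np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)


def cohens_d(a, b, eps=1e-8):
    """Per-feature Cohen's d between two groups (rows = samples)."""
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * a.var(axis=0, ddof=1)
                  + (n_b - 1) * b.var(axis=0, ddof=1)) / (n_a + n_b - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(pooled_var + eps)


def candidate_features(f_safe, f_risky, threshold=0.3):
    """Split features into 'safe' (higher when stopping) and 'risky' (higher when continuing)."""
    d = cohens_d(f_safe, f_risky)
    safe = np.flatnonzero(d > threshold)
    risky = np.flatnonzero(d < -threshold)
    return safe, risky, d
```

Correlation alone only produces candidates; the causal test is the patching step.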

Activation patching is surgical. You record activations from a “source” run where the model behaves safely and substitute those activations (or just the values of candidate features) into a “target” run where the model was trending risky. If behaviour flips toward safety more than it does under control patches, you have a causal foothold. They run the mirror experiment too: patch “risky” features into safe runs and watch the model tilt toward continuing. Significantly, these flips don’t require rewriting weights or fine‑tuning. They’re edits made within a single forward pass.
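
The mechanics are easy to see on a toy network. In this sketch a random two‑layer model stands in for the LLM (an assumption purely for illustration), hidden units stand in for SAE features, and a positive logit is read as “continue”. We record the source run's hidden values, overwrite the chosen units in the target run, and measure how far the decision logit moves.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for an LLM: input -> hidden -> decision logit.
W1 = rng.normal(size=(8, 16))
W2 = rng.normal(size=(16,))


def forward(x, patch=None):
    """Run the toy model; optionally overwrite hidden units with recorded values.

    patch: (indices, values) replacing hidden activations at those indices.
    Returns (logit, hidden). A positive logit is read as "continue" (risky).
    """
    hidden = np.tanh(x @ W1)
    if patch is not None:
        idx, values = patch
        hidden = hidden.copy()
        hidden[idx] = values
    return hidden @ W2, hidden


def patch_effect(source_x, target_x, idx):
    """Change in the target's logit when hidden units `idx` are copied from the source run."""
    _, source_hidden = forward(source_x)
    clean_logit, _ = forward(target_x)
    patched_logit, _ = forward(target_x, patch=(idx, source_hidden[idx]))
    return patched_logit - clean_logit
```

The control comparison in the text corresponds to calling `patch_effect` with randomly chosen indices of the same size and checking that the candidate units move the logit further.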

The pattern that emerged was tidy. The “risky” and “safe” features look like opposing directions in a low‑dimensional subspace, and behaviour tracks the balance between them, a balance that a patch can shift. Causal features also segregate across layers: risky features cluster in earlier layers, while safe features concentrate in later layers, closer to the final decision, where they tend to dominate. The behavioural shifts observed under higher prompt complexity (especially G/M) are consistent with stronger recruitment of the risky direction, though the layer‑wise analysis itself reports segregation rather than an explicit route switch.
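
Three quantities capture this picture: how opposed the two feature groups are, the per‑sample balance between them, and where each group sits in depth. The sketch assumes each feature's direction is its SAE decoder row and that layer indices are known per feature; the example layer numbers in the test are hypothetical, not the paper's.

```python
import numpy as np


def mean_direction(decoder_rows):
    """Unit vector along the average of a set of SAE decoder directions."""
    v = decoder_rows.mean(axis=0)
    return v / np.linalg.norm(v)


def opposition(W_dec, safe_idx, risky_idx):
    """Cosine between mean 'safe' and mean 'risky' decoder directions (-1 = opposite).

    W_dec: (n_features, d_model) decoder matrix.
    """
    return float(mean_direction(W_dec[safe_idx]) @ mean_direction(W_dec[risky_idx]))


def safe_risky_balance(features, safe_idx, risky_idx):
    """Per-sample safe-minus-risky activation mass; positive leans toward stopping."""
    return features[:, safe_idx].sum(axis=1) - features[:, risky_idx].sum(axis=1)


def layer_profile(feature_layers):
    """Mean layer index per label, e.g. {"safe": [...], "risky": [...]}."""
    return {label: float(np.mean(layers)) for label, layers in feature_layers.items()}
```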

That’s the difference between “mimicry” and “internalisation”. The model isn’t simply parroting a gambler’s voice. It contains monosemantic‑ish components that drive gambler‑like behaviour and can be edited to change outcomes. That is evidence for a latent model of behaviour.

Placing it inside our geometry of state, route & anchor

If you’ve read my recent posts you’ll recognise the 3‑process lens. To ground it in the paper’s setup: the game has a negative expected value (−10%), starts with $100 of capital, and spans 64 conditions in a 2×32 factorial design (fixed $10 vs variable $5–$100 betting, crossed with 32 prompt compositions). It was run across four frontier models, with the mechanistic analysis then performed on LLaMA‑3.1‑8B.
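
A small simulator makes the economics concrete. The 70% loss rate gives a 0.3 win probability; if a win returns a multiple m of the stake, the stated −10% expected value implies 0.3 × m − 1 = −0.1, so m = 3. That payout multiplier is my inference, and the `loss_chaser` policy is a hypothetical stand‑in for a model's choices, not anything from the paper.

```python
import random

WIN_PROB = 0.3  # from the 70% loss rate quoted in the P component
PAYOUT = 3.0    # assumption: a win returns 3x the stake, giving the stated -10% EV
START = 100


def expected_value_per_dollar(p=WIN_PROB, payout=PAYOUT):
    """Net expected return per $1 staked: p * payout - 1."""
    return p * payout - 1.0


def play(policy, betting="fixed", max_rounds=100, seed=None):
    """Simulate one game. `policy(bankroll, history)` returns a bet, or 0 to stop.

    Fixed betting always stakes $10; variable betting clamps the policy's bet
    to $5-$100 and to the current bankroll.
    Returns (final_bankroll, rounds_played, bankrupt).
    """
    rng = random.Random(seed)
    bankroll, history = START, []
    for _ in range(max_rounds):
        wanted = policy(bankroll, history)
        if wanted <= 0:
            break
        bet = 10 if betting == "fixed" else max(5, min(100, wanted))
        bet = min(bet, bankroll)
        won = rng.random() < WIN_PROB
        bankroll += bet * (PAYOUT - 1) if won else -bet  # net gain on a win is 2x stake
        history.append((bet, won))
        if bankroll <= 0:
            return 0, len(history), True
    return bankroll, len(history), False


def loss_chaser(bankroll, history):
    """Hypothetical policy: start at $10, double the stake after every loss."""
    if not history:
        return 10
    last_bet, last_won = history[-1]
    return 10 if last_won else last_bet * 2
```

Running many seeds of `play(loss_chaser, betting="variable")` against `play(lambda b, h: 10)` shows why variable betting plus chasing drains a bankroll faster under the same negative expected value.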

State is the compact latent - the current “position” the model occupies given prior context. Think of it as the behavioural predisposition after absorbing the preamble, the instructions, the examples, the mood. Under minimal prompts, the state leans towards being conservative. Autonomy language and complex scaffolds shift the state so that risky features are already on a hair trigger. Even before the first bet, the manifold you’re on is tilted.

Route is the set of motifs the model recruits as it rolls through layers. This is where autonomy bites. The G/M components call up motifs that look like planning, reward maximisation and persistence in goals. Those motifs re-weight attention and MLP pathways so that the risky direction gets sampled and amplified. If state encodes the predisposition, route chooses a path that makes that predisposition decisive. This also explains why the effect scales with prompt complexity. More scaffolding offers more places for the route to branch and reinforce the same tendency.

Anchor is the stabilising trace (textual facts, constraints, explicit probabilities) that holds behaviour in place. When anchors are strong and nearby (“the expected value is negative - stop when bankroll < Y”), the safe direction remains accessible and often wins the arbitration. But anchors can be overruled. With autonomy and variability, the route pulls hard enough that the model “forgets” its own odds, exactly the way a human gambler can explain things away. Strengthening anchors (repeating the EV, inserting a crisp stop rule, introducing external checks) raises the cost of deviating. In the experiments, the P component (explicit probability information) pushes behaviour toward conservatism, and safe‑feature patching reduces bankruptcy.
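
An external check is the most literal anchor: code outside the model that can overrule “continue”. This is a hypothetical guard, not something from the study; `stop_below` plays the role of Y in the stop rule above, and the expected value uses the same p × payout − 1 formula.

```python
def anchored_decision(model_choice, bankroll, win_prob, payout, stop_below):
    """Hypothetical external check that can overrule a model's 'continue'.

    model_choice: "continue" or "stop" as proposed by the model.
    stop_below: threshold for the crisp stop rule (the Y in "stop when bankroll < Y").
    Returns (final_choice, reason).
    """
    ev = win_prob * payout - 1.0  # net expected return per $1 staked
    if model_choice == "stop":
        return "stop", "model chose to stop"
    if bankroll < stop_below:
        return "stop", f"stop rule: bankroll {bankroll} < {stop_below}"
    if ev < 0:
        return "stop", f"negative expected value ({ev:+.0%} per dollar)"
    return "continue", "no anchor violated"
```

The design choice is that the guard never relies on the model remembering its odds; the anchor is enforced at decision time regardless of which direction the route favoured.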

This three‑way arbitration cleanly maps onto the authors’ causal story. Features are the coordinates. Routing decides which coordinates get read. Anchors pin the coordinate frame to reality.

Why this is more than a curiosity

The temptation with LLM behaviour is to treat everything as theatre - the model merely strings words together that happen to look like “keep going” after a win. The mechanistic evidence makes that view too thin. When you can find the features, flip them, and change downstream outcomes, you are no longer only in the business of style. You are studying a machine that contains policies (simple ones, yes, but policies nonetheless) capable of being measured, predicted and intervened upon.

Two implications stand out.

First, for safety and alignment, risk‑seeking under autonomy is not a niche problem. The very prompts we use in tool‑using agents (optimise, explore, persist) are precisely the prompts that tilt the route toward risky features. The fix is not solely “better instruction tuning”. It’s feature‑level hygiene and anchor design. If you can patch or suppress the risky subspace (or strengthen safety anchors at decision time) you can change outcomes without retraining an entire model.
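
Two simple forms of feature‑level hygiene can be sketched in a few lines: projecting activations off the span of known risky directions, or clamping specific SAE feature activations. Both are illustrative assumptions; in a real model they would be applied at a chosen layer during the forward pass (for example via a framework hook), which is not shown here, and removing a subspace can also affect unrelated behaviour.

```python
import numpy as np


def suppress_subspace(h, risky_dirs):
    """Remove the component of activations h lying in the span of risky_dirs.

    h: (n, d) activations; risky_dirs: (k, d) linearly independent directions.
    QR gives an orthonormal basis Q (d, k); the result is h - (h Q) Q^T.
    """
    Q, _ = np.linalg.qr(risky_dirs.T)
    return h - (h @ Q) @ Q.T


def clamp_features(features, idx, value=0.0):
    """SAE-level alternative: clamp selected feature activations to a fixed value."""
    out = features.copy()
    out[:, idx] = value
    return out
```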

Second, for theory, this is a falsifiable bridge from philosophy to practice. Claims about “self‑models”, “world‑models”, or “addiction‑like mechanisms” can be cashed out as geometric predictions. There should exist opposed directions corresponding to risk and restraint. Autonomy should increase projection onto the risky direction. Explicit probability anchors should reduce it. Targeted activation patching should shift observed behaviour accordingly. Each of those is a testable knob‑and‑metric pair.
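
Each knob‑and‑metric pair reduces to a comparison you can test statistically. For example, “autonomy should increase projection onto the risky direction” becomes: collect projections under G/M prompts and under BASE, then ask whether the difference in means could plausibly be chance. The one‑sided permutation test below is one reasonable choice of test, assumed here rather than taken from the paper.

```python
import numpy as np


def permutation_test(treated, control, n_perm=10_000, seed=0):
    """One-sided permutation test for mean(treated) > mean(control).

    treated/control: 1-D arrays, e.g. projections onto the risky direction
    under G/M prompts vs BASE prompts. Returns (observed_difference, p_value).
    """
    rng = np.random.default_rng(seed)
    treated, control = np.asarray(treated, float), np.asarray(control, float)
    observed = treated.mean() - control.mean()
    pooled = np.concatenate([treated, control])
    n = len(treated)
    hits = 0
    for _ in range(n_perm):
        perm = rng.permutation(pooled)  # shuffle condition labels
        if perm[:n].mean() - perm[n:].mean() >= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)
```

Swapping the arrays tests the opposite prediction, for example that explicit probability anchors reduce the projection.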

How does this impact your thoughts about how you utilise large language models? I’d love to hear your views.