The '3-Process' View Of How LLMs Build 'Latent Models' In Context

Why do Large Language Models feel so brilliant one moment and so bafflingly fragile the next?

Last week I published the “parrot vs thinker” article, which explores a binary debate - either large language models are remixing surface patterns with astonishing effectiveness, or they are learning something like internal models of the world, enough to track beliefs, hold a point of view and even plan. My aim was to avoid the binary “claims” and instead explore how the internal machinery of LLMs can allow them to build useful “latent models”. That article introduced only the core of this “latent model” view; my research and experiments (and a lot of other people’s) support an even more nuanced view.

Why do LLMs feel so brilliant one moment and so amazingly fragile the next? One minute they hold a consistent point of view across a long conversation, and the next a trivial change in word order sends their logic off a cliff. The answer isn’t a simple binary of “parrot vs. thinker”. The reality is far more interesting: we’re witnessing a hybrid machine that constantly builds and borrows models in context, switching tactics as the prompt evolves.

This post sketches out this more nuanced “3-process” perspective of “model building” in LLMs. It explains both their fluency and their brittleness, then looks at how two recent papers (on recursive latent reasoning and on reinforcement learning for procedural “abstractions”) fit cleanly into this story.

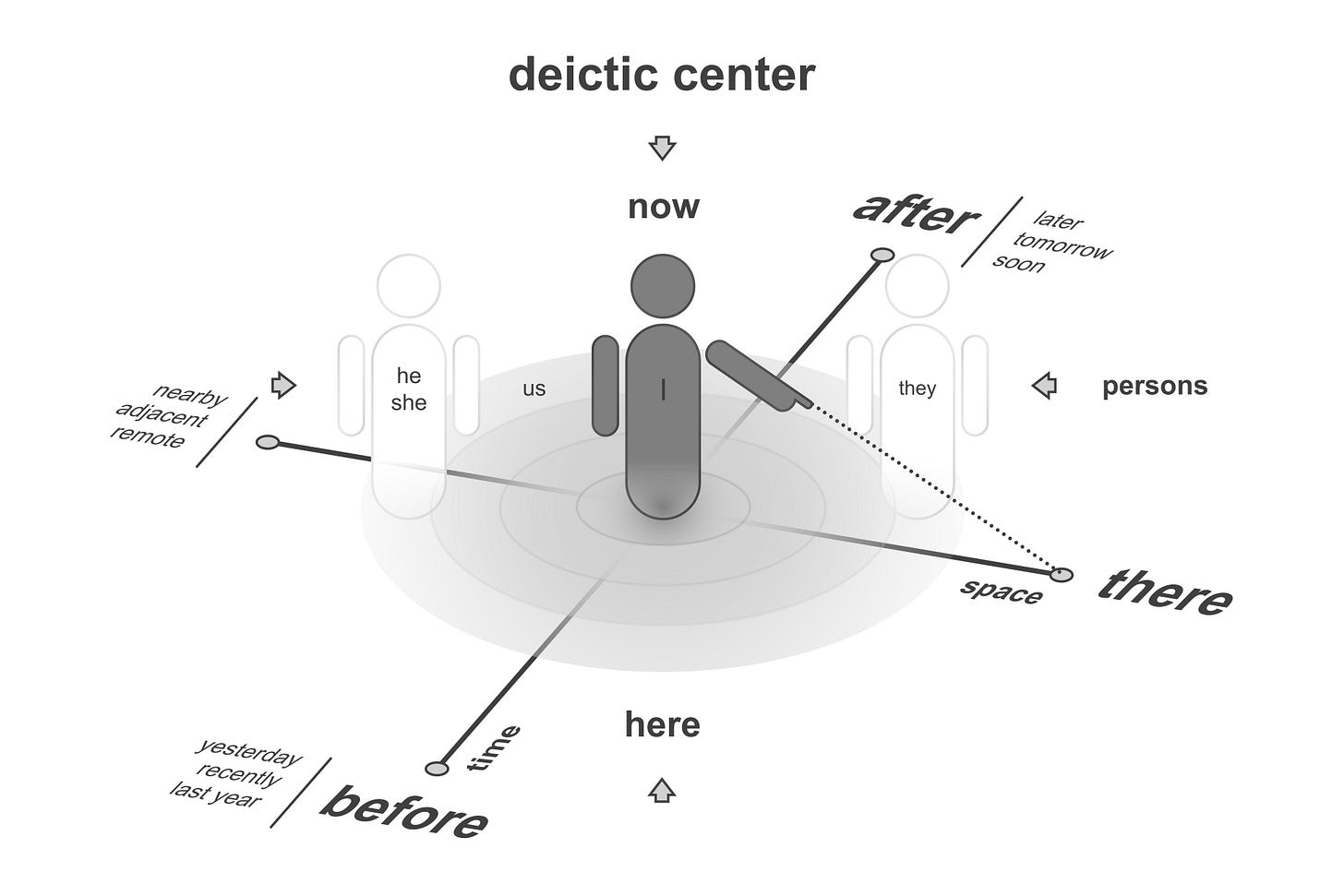

Beyond deixis

Change a single pronoun and the stance in an LLM’s response flips, or move a role tag and suddenly the same model sounds like a different person. That’s not magic (just the physics of attention) but it hints at something deeper - models track who is speaking to whom, and tune their language accordingly. Those are variables you usually keep in your head, not in your prompt.

If you know where to look for them, you can find linearly decodable traces in the late residual stream - speaker/addressee, local tense, near/far deixis, politeness. Patch those traces from one run into another and the behaviour follows, or surgically weaken them and the behaviour softens. That looks a lot like a compact latent state - written at one layer and then read later. But it doesn’t mean everything is linearly separable, and it doesn’t rule out richer nonlinear structure. It just adds weight to the “there’s a there there” claim.
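To make the probe-and-patch idea concrete, here is a minimal sketch on synthetic activations rather than a real model. It assumes a binary variable (say, speaker vs addressee) is written along one hidden direction of a 16-dimensional toy "residual" vector. The names `make_acts`, `fit_probe` and `patch` are hypothetical helpers, and the dimensions and noise levels are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16  # toy residual width (assumption)

# Assumption: the variable is written along a single unit direction, plus noise.
true_dir = rng.normal(size=D)
true_dir /= np.linalg.norm(true_dir)

def make_acts(labels, scale=3.0, noise=0.5):
    """Synthetic 'late residual' vectors: +/- scale along true_dir plus Gaussian noise."""
    signs = 2 * labels - 1  # map {0, 1} to {-1, +1}
    return signs[:, None] * scale * true_dir + noise * rng.normal(size=(len(labels), D))

def fit_probe(X, y):
    """Least-squares linear probe; the prediction is the sign of X @ w."""
    targets = 2 * y - 1
    w, *_ = np.linalg.lstsq(X, targets, rcond=None)
    return w

def patch(target, source, direction):
    """Swap target's component along `direction` for the source's component."""
    d = direction / np.linalg.norm(direction)
    return target - (target @ d) * d + (source @ d) * d

labels = rng.integers(0, 2, size=400)
X = make_acts(labels)
w = fit_probe(X[:300], labels[:300])
acc = np.mean((X[300:] @ w > 0) == (labels[300:] == 1))  # held-out probe accuracy

# Patch the probed direction from a label-1 "run" into a label-0 "run".
run0 = make_acts(np.array([0]))[0]
run1 = make_acts(np.array([1]))[0]
patched = patch(run0, run1, w)

print("probe accuracy:", acc)
print("readout before patch:", run0 @ w, "after patch:", patched @ w)
```

In this toy the readout follows the patch because the variable really does live on one direction. In a real model that is exactly the thing you are testing, so a failed patch is as informative as a successful one.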

At the same time, the more you push models off their happy path (longer contexts, scrambled premise order or subtle wording changes) the more you see something else - routes rather than states. You can have strong local representations and still watch the model “miss the turn” if a connective moves or an example appears in a different order. And of course there’s the third ingredient we all met on day one - the prompt itself as an anchor. Duplicate the system instruction and change the role marker, and the opening sentence shifts. Large swings in behaviour follow from minimal change to the internal geometry.

Those 3 observations (state traces, route fragility & anchor pull) are the core of this “3-process” perspective.

The 3 processes

Process 1: A compact latent state

Think of this as the model’s short-term workspace - like jotting a key fact on a notepad. Mid-to-late layers (model-dependent) write a small code into the residual stream (call it z) that captures a useful variable for the current span: “I vs you,” the current plan step, or which entity is under discussion. Later components then read z cheaply. These are the cases where linear probes pop, where subtle feature edits flip a pronoun or stance with surgical precision and where behaviour is relatively robust to moving the furniture around in the prompt. When models feel consistent, this state usually wins. This is precisely where the “latent deictic model” (LDM) I discussed in my previous article lives - a compact, reusable state that tracks roles like speaker/addressee, time, and place.

Process 2: Recomputed latents (routing motifs)

At other times the model doesn’t maintain a clean z. It re-derives what it needs on demand by running a little procedure - like re-calculating a math problem from scratch when you need it. It gathers evidence with attention, composes with an MLP, uses the result, then tosses it. You can call these routing motifs - recurrent subgraphs you can spot across inputs with similar control flow. Behaviour in this regime is flexible but brittle: order matters, phrasing matters, long-range dependencies wobble. Here, finding a single, isolated ‘state’ with a probe is difficult. Instead, the logic is distributed along a pathway, and disrupting that entire route is what causes the behaviour to break.

Process 3: KV-runtime anchoring (pointers to the past)

Finally, some persistence comes from not compressing anything at all. Early tokens (system prompts, role tags or initial examples) remain high-leverage entries in the key–value cache - like keeping a finger on the first page of a book. Other layers keep looking them up. This is why moving the opening instruction or segmenting the cache can reset a persona. It’s also why “first token gravity” threads through interpretability papers - there’s a gravitational centre inside many prompts, and it lives in the cache.
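A toy version of the anchoring effect can be sketched with a single attention head over a small cache. This is an illustration under strong assumptions, not a measurement of any real model: the first cache entry is given a key that is deliberately aligned with the later query, and `attend` is a hypothetical helper implementing plain scaled dot-product attention.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query over a cache."""
    scores = keys @ query / np.sqrt(query.shape[0])
    weights = softmax(scores)
    return weights, weights @ values

rng = np.random.default_rng(0)
d, n_tokens = 8, 6
keys = rng.normal(size=(n_tokens, d))
values = rng.normal(size=(n_tokens, d))
query = rng.normal(size=d)
query *= np.sqrt(d) / np.linalg.norm(query)  # fix the query norm so scores sit on a known scale

# Assumption: the anchor (e.g. a system-prompt token) has a key aligned with later queries.
keys[0] = 5.0 * query / np.linalg.norm(query)

w_full, out_full = attend(query, keys, values)          # anchor still in the cache
w_cut, out_cut = attend(query, keys[1:], values[1:])    # cache segmented: anchor dropped

print("attention on anchor:", w_full[0])
print("output shift when anchor is removed:", np.linalg.norm(out_full - out_cut))
```

Nothing about the later tokens changed between the two calls. Only reachability of the first entry did, which is the sense in which the anchor is a pointer rather than a compressed state.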

Most real prompts are likely to recruit all 3 processes. The model seeds routes off anchors. If evidence stabilises, it consolidates a compact z. When that state is weak or conflicted, it falls back on re-computation. The result is the peculiar mix you might recognise - answers that feel coherent one moment and then, a paragraph later, fall apart because some silent route flipped.

Why this hybrid explains both grace and brittleness

The grace is amortisation - once z is written, the model can read it cheaply, so you get a steady perspective. That’s why mid-layer workspace results keep showing up - intermediate layers often carry richer, more stable structure than the final layer, which is busy with the last-mile label.

The brittleness is arbitration - the model is constantly deciding which process should lead. Sparse evidence? Route. Strong repeated cues? Write z. Heavy, well-placed instructions? Anchor. Small adjustments (an added header or a re-ordered clause) can tip the balance. That’s not a bug in “the LLM”, it’s the consequence of squeezing state, procedure and pointer through the same residual and attention machinery.

A nice way to hold this in your head is local geometry. Sometimes a variable really is close to a single direction. But more often it’s a curved surface (a low-dimensional manifold inside the residual space) on which nearby points preserve meaning. In those regions, cosine looks like the right distance and linear readouts work. Outside them, you need to follow the curve or recompute. That reconciles the “monosemantic feature” successes with the messy reality of superposition and routing - layers carve temporary, task-conditioned pockets where readout is easy but the global space remains crowded.
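The "linear in a patch, not globally" point can be sketched with the simplest curved surface available: a circle. This is a toy under stated assumptions (the variable is an angle, the representation is its position on a unit circle), and `embed`, `fit_linear_readout` and `readout` are hypothetical helpers.

```python
import numpy as np

def embed(theta):
    """Points on a unit circle: a 1-D curved manifold embedded in 2-D space."""
    return np.stack([np.cos(theta), np.sin(theta)], axis=-1)

def design(theta):
    """Embedded points with a bias column, for an affine readout."""
    return np.hstack([embed(theta), np.ones((len(theta), 1))])

def fit_linear_readout(theta):
    """Least-squares affine map from embedded points back to the variable theta."""
    coef, *_ = np.linalg.lstsq(design(theta), theta, rcond=None)
    return coef

def readout(coef, theta):
    return design(theta) @ coef

local = np.linspace(-0.3, 0.3, 50)  # a small patch of the manifold
far = np.linspace(2.0, 3.0, 50)     # a region the readout never saw

coef = fit_linear_readout(local)
local_err = np.max(np.abs(readout(coef, local) - local))
far_err = np.max(np.abs(readout(coef, far) - far))

print("max error inside the patch:", local_err)
print("max error far from the patch:", far_err)
```

Inside the patch the curve is nearly flat, so a linear readout recovers the variable almost exactly. Far from it, the same readout is badly wrong even though the representation is just as clean, which is the toy analogue of having to follow the curve or recompute.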

The thread of evidence

We started with those decodable, causally editable state traces - Othello-style “board codes”, sparse-autoencoder features that toggle crisp behavioural changes and the boring but decisive trick of adding a learned direction to the residual and watching a stance flip while everything else stays put. That’s the state story.
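The "add a direction and watch one thing flip" trick can be sketched in a few lines. This is a toy, assuming the stance and an unrelated variable (here called topic) are read out along two orthogonal directions of a made-up residual vector. In practice the direction would be learned from a model's activations, whereas here it is simply given, and `steer` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(1)
D = 32  # toy residual width (assumption)

# Assumption: two orthonormal readout directions, built with a QR decomposition.
q, _ = np.linalg.qr(rng.normal(size=(D, 2)))
stance_dir, topic_dir = q[:, 0], q[:, 1]

def steer(resid, direction, alpha):
    """Add a scaled direction to the residual vector."""
    return resid + alpha * direction

# A residual with negative stance, positive topic, and a little noise.
resid = -1.5 * stance_dir + 2.0 * topic_dir + 0.1 * rng.normal(size=D)
steered = steer(resid, stance_dir, alpha=3.0)

print("stance readout:", resid @ stance_dir, "->", steered @ stance_dir)
print("topic readout: ", resid @ topic_dir, "->", steered @ topic_dir)
```

The topic readout stays put only because the two directions were constructed to be orthogonal. In a crowded, superposed residual stream that is not guaranteed, which is why narrow edits matter.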

Then we spent time with the classic in-context learning literature that reads transformers as learned optimisers - the model acts like it’s running one step of inference over functions implied by the examples. In practice, you see exactly what that story predicts - order effects, sensitivity to “few-shot noise”, and failure modes that go away if you remove a few heads along a particular route. That’s the procedure story.

Anchors were unmissable from day one, but the picture sharpened with analyses of attention sinks and “first-token pull”. The model’s gravity wells aren’t just poetic. They’re the way a network without explicit memory holds a stance over a long context. KV isn’t thinking, it’s reachability. But it shapes which route wins and which state stabilises.

Underneath those 3 threads, the mid-layer result keeps reappearing - representations in the intermediate layers, well before the final unembedding, look better (more structured and more reusable) than those in the final layer. That’s exactly where you’d expect a compact state to live and a routing motif to write intermediate invariants for later reuse.

The last piece that helped consolidate the story was the manifold view of representation. If features lie on low-D curved surfaces, you can see why small, local probes work and why long-range generalisation sometimes fails - the readout is linear in a patch, not globally. It also explains why narrow, geometry-aware edits can shift behaviour gracefully while blunt moves do harm.

Put together, the evidence points towards a more nuanced control policy that recruits state, procedure, and pointer opportunistically.

Two fresh results that strengthen the picture

A strong way to test a theory is to look for converging evidence from very different setups. Two recent papers do exactly that.

1) Persistent latent reasoning in a tiny recursive network

A minimalist study replaces heavyweight transformers with a tiny network that carries two explicit runtime features from step to step - the current solution y and a dedicated latent reasoning state z. The model updates z a few times, uses it to refine y, then repeats - no KV cache tricks, no sprawling attention map. Despite its simplicity, the system jumps in performance on structured tasks like Sudoku and mazes and does surprisingly well on ARC-AGI. The key insight for our purposes - a small, persistent z acts as a first-class workspace. Early steps look like route-heavy re-computation; then, as z stabilises, the behaviour looks like write-once/read-many state. That’s our Process-2 handing off to Process-1, made explicit and inspectable.
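The control flow described above (update z a few times, refine y, repeat) can be sketched as a pair of nested loops. This follows only the description in this post and is not the paper's architecture or training setup. The weights are random and untrained, and `update_z`, `update_y`, `recursive_refine` and all sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 8  # toy feature width (assumption)

# Hypothetical, untrained weights: this shows the loop structure only.
W_z = rng.normal(scale=0.3, size=(3 * D, D))
W_y = rng.normal(scale=0.3, size=(2 * D, D))

def update_z(x, y, z):
    """Refresh the latent reasoning state from the input, current answer and old state."""
    return np.tanh(np.concatenate([x, y, z]) @ W_z)

def update_y(y, z):
    """Refine the current answer using the latent state."""
    return np.tanh(np.concatenate([y, z]) @ W_y)

def recursive_refine(x, outer_steps=3, inner_steps=4):
    y = np.zeros(D)  # current solution
    z = np.zeros(D)  # persistent latent state, carried across steps
    trace = []
    for _ in range(outer_steps):
        for _ in range(inner_steps):
            z = update_z(x, y, z)  # several cheap updates to the workspace
        y = update_y(y, z)         # then one read of the workspace to refine the answer
        trace.append((y.copy(), z.copy()))
    return y, z, trace

x = rng.normal(size=D)
y, z, trace = recursive_refine(x)
print("final y:", y)
print("outer steps recorded:", len(trace))
```

The point of the sketch is that z is an explicit variable you can log and inspect at every step, rather than something you have to recover with a probe.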

Paper: “Less is More: Recursive Reasoning with Tiny Networks”

2) Reinforcing procedural “abstractions” that later internalise

Another study trains a model to generate short, input-specific abstractions (little textual priors about how to proceed) and rewards those that help a solver succeed. Initially the abstraction is just a prompt-level scaffold - a strong anchor that steers routes. But here’s the interesting bit - over training, performance improves even when you remove the abstraction at test time. The procedural regularities have been internalised. In our language, strong Process-3 scaffolding and Process-2 routing gradually consolidate into Process-1 state. You can still use the prompts as crutches, but you don’t have to.

Paper: “RL with Abstraction Discovery (RLAD)”

Why do these two support this 3-process perspective? Because both show, in different ways, that compact runtime latents aren’t a romantic inference we’re projecting onto transformers. They’re a useful computational primitive that a model can adopt when it helps - whether that model is a tiny recursive MLP or a full-scale LLM. And both show the hand-off we keep seeing in the wild - routes and anchors are excellent scaffolds, but stable behaviour comes from writing a state you can cheaply reuse.

Now we have a more complete picture

This describes a more interesting animal. Sometimes it leans on the prompt as a pointer. Sometimes it learns to follow a procedure. And sometimes it writes a motif into memory as a state. The same animal using 3 ways of making meaning.

If you’ve been on the fence about whether LLMs “really model”, my view is - yes they do, but not always and not in just one way. They build small, contextual models because it’s a cheap path to good predictions. They abandon them when evidence is sparse or conflicting. And they keep a hand on the prompt because it’s the easy place to stash inertia.

The two papers above support this story:

Less is More: Recursive Reasoning with Tiny Networks shows that giving a system an explicit, persistent z makes the state-building pathway concrete (and powerful) even in very small models.

Reinforcement Learning with Abstraction Discovery shows that strong, external scaffolds can become internal structure over time - procedural prompts evolve into latent know-how.

Together, they support this 3-process story - anchors steer, routes compute, and when it’s worth it, the model writes things down. That’s not a parrot imitating thought - it’s a machine learning when and how to think using the mathematical machinery it has available.

Understanding this dynamic trio of state, route, and anchor isn’t just academic. It gives us a clear vocabulary for debugging these models and tangible levers for building more robust systems. Instead of just hoping for consistency, we can design prompts and fine-tune methods that encourage the model to build a stable “state” when it matters most.