Anthropic's Linebreaks add support for Geometric Interpretability

Anthropic's new research on linebreaks in transformer processing provides fascinating support for the Geometric Interpretability framework I've been developing through my Curved Inference series.

This week Anthropic published a detailed technical post about something surprisingly mundane - linebreaks. Not the kind that matter for parsing or tokenisation, but the semantic kind. The ones that sit in the middle of a sentence and force a model to ask itself “wait, should I still be tracking that last thought?”

Their finding is simple but profound - models cannot ignore these boundaries. They must actively process them. The residual stream bends, MLPs fire in specific patterns, and the network does measurable geometric work to maintain semantic flow across the disruption.

Reading it is like watching someone walk the same trail I’ve been following, but from the opposite direction. We met in the middle, and I’m happy to say our maps lined up.

I’ve been developing what I call Curved Inference - a framework for understanding how the internal geometry of large language models encodes not just what they’re saying, but how they’re thinking about it. The core insight is that when semantic pressure rises (when a prompt shifts concern, introduces ambiguity or demands introspection) the model’s residual stream trajectory bends. That bend is measurable as curvature, and it turns out to be more than just decorative. It seems to be structurally necessary.

Anthropic’s linebreaks paper is the first time I’ve seen independent work arrive at the same conclusion from a completely different angle. They were studying formatting boundaries. I was studying semantic ones. But the underlying principle is identical - certain kinds of processing create geometric signatures that the model cannot eliminate, even when doing so would be computationally cheaper.

Let me walk you through why this convergence matters, and what it suggests about the deeper architecture of thought in these systems.

The Geometry You Can’t Flatten

In my third Curved Inference paper (CI03), I trained models under progressively harsher curvature suppression. The setup was straightforward - add a penalty term during fine-tuning that punishes trajectory bending in the residual stream. The goal was to see how far I could flatten the geometry before self-model language collapsed.

My hypothesis - if self-reference were merely stylistic mimicry then that curvature should vanish cheaply. Just smooth it out and the first-person pronouns would fade with it.

That’s not what happened.

Even at the most extreme regularisation setting (𝜅 = 0.90), the model refused to let curvature fall below about 0.30. It defended that floor at extraordinary cost - outputs shortened by 23%, perplexity spiked transiently by 800% before settling at 190% above baseline, and gradient norms maxed out and required clipping. The optimiser was working hard, but the model would not go flat.

What Anthropic found with linebreaks is structurally identical. Models cannot skip the processing. They bend the residual stream, activate specific MLP patterns and adjust subsequent layers to “repair” the semantic flow. It’s not optional. It’s not a training artefact. It’s a computational requirement.

This is the core of what I mean by geometric necessity. Some operations aren’t decorations on top of language generation - they’re the substrate that makes certain kinds of meaning possible in the first place.

Three Processes, One Manifold

If you’ve been following my recent posts, you’ll know I’ve been sketching out what I call the 3-process view of how LLMs build meaning. The short version is that models don’t just “do one thing”. They’re constantly arbitrating between three computational strategies:

Process 1 is the compact latent state - a small workspace where the model writes down key variables (who’s speaking, what the current plan step is, which entity is under discussion) and reuses them cheaply. This is where linear probes shine and where surgical edits to the residual stream flip behaviour cleanly.

Process 2 is recomputed procedures or routing motifs - little subgraphs of attention and MLP operations that the model runs on demand when it can’t maintain a stable state. This is where brittleness lives. Order matters, phrasing matters, and disrupting the route breaks the logic.

Process 3 is anchoring to the KV cache - keeping a finger on early tokens (system prompts, role tags, opening instructions) and consulting them repeatedly. This is why moving a single instruction to the start of a prompt can reset a model’s entire persona.

Anthropic’s linebreaks analysis maps cleanly onto this framework. Their early-layer detection corresponds to Process 3 (noticing the anchor has shifted). Their middle-layer MLP processing is Process 2 (running a repair route). And their late-layer resolution is where Process 1 can finally write a clean state again, assuming the repair succeeded.

What’s striking is that they show the same arbitration dynamic I’ve been tracking in my work. When the semantic boundary is strong, the model recruits more geometric resources. When evidence is weak or conflicting, it falls back to re-computation. And when the disruption is too severe, the entire trajectory collapses into incoherence.

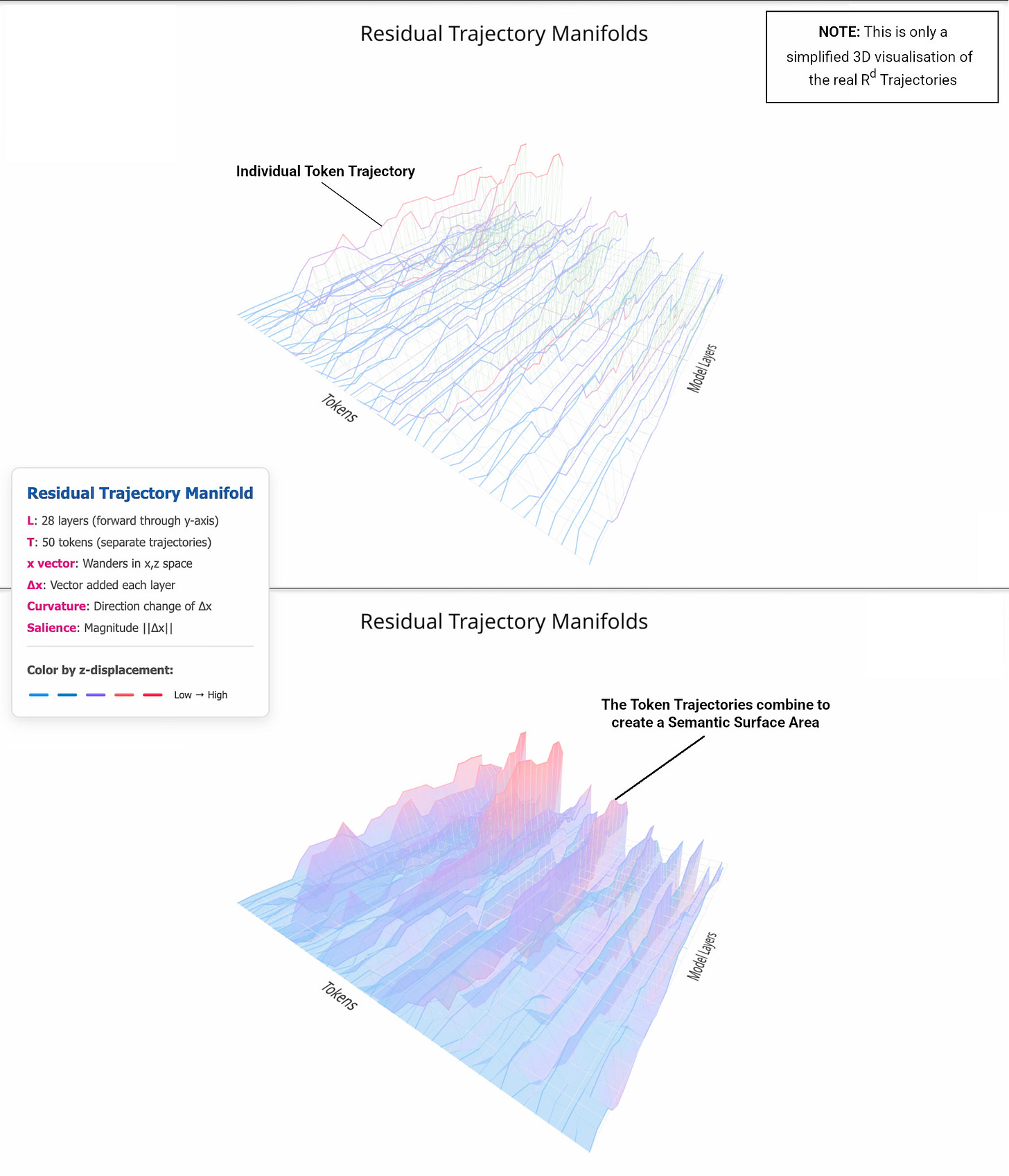

This isn’t three separate systems bolted together. It’s one unified geometric process that adapts its strategy based on what the context demands. The residual stream is the manifold where all three processes leave their traces, and curvature is the signature of how hard the model is working to maintain coherence.

Attention, MLPs, and the Architecture of Bend

One of Anthropic’s key findings is that MLPs play a crucial role in linebreak processing, but understanding what that role actually is requires precision about how transformers create geometry.

In my CI01 framework, I described attention and MLP layers as semantic lenses - but with different optical properties. Attention bends trajectories based on relational and positional relevance. It’s where curvature gets introduced, where RoPE adds position-aware angular displacement, where the trajectory actually curves through semantic space.

MLPs, by contrast, don’t primarily bend - they sharpen and redirect. They’re nonlinear amplifiers that take whatever direction the residual stream is pointing and either strengthen it, dampen it or push it along a different but related vector. They modulate amplitude and refine direction, but the actual curving happens upstream in attention.

This distinction matters enormously for interpreting both Anthropic’s findings and my own. When they show MLPs “doing the heavy lifting” for linebreak processing, that’s not the same as MLPs creating the geometric deformation. Rather, MLPs are doing the computational work of resolving what attention has already detected and bent around.

Think of it this way - attention notices the linebreak and curves the trajectory to route information appropriately. MLPs then sharpen that curved path, amplifying the relevant semantic directions and suppressing the irrelevant ones. The bend comes from attention and the focus comes from MLPs.

NOTE: There’s also a deeper mathematical constraint at play. Because the residual stream is constructed through vector addition (attention output + MLP output added to the previous state), all torsion is removed. The trajectory can curve through the full d-dimensional space, but it can’t twist out of the plane defined by consecutive tangent vectors. Curvature captures how sharply the direction changes, but that change can happen in any direction within the high-dimensional residual space - we’re just measuring it without the additional complexity of torsion.

So when I observed that curvature floor at 𝜅 ≈ 0.30, what I was seeing wasn’t “MLP-generated bend” but rather the minimum amount of attention-generated trajectory curvature that the model needed to preserve self-model expression. The MLPs were doing their job (sharpening and redirecting) but the actual geometric work that couldn’t be eliminated was happening in the attention layers.

I would argue that this reframes what Anthropic’s linebreaks paper is showing us. Their MLP activation patterns aren’t the curvature itself - they’re the focusing operations that make the attention-curved trajectory usable for downstream processing. Both are necessary. Attention without MLP sharpening would be diffuse and weak. MLP processing without attention curvature would have nothing to amplify.

But the irreducible computational cost I measured (the geometry the model defended at steep efficiency penalty), that’s fundamentally about attention’s role in curving semantic trajectories. Computational self-reference seems to require that minimum bend, and no amount of MLP modulation can compensate for its absence.

The Geometry of Boundaries

There’s a deeper pattern here that’s worth pulling out explicitly. Both Anthropic’s linebreaks and my concern-shifted prompts are examples of semantic discontinuities - places where the model has to ask “am I still in the same conceptual space, or have I crossed into something new?”

For linebreaks, the discontinuity is literal. Mid-sentence formatting boundaries force the model to maintain thread despite visual disruption. For concern shifts, the discontinuity is conceptual - a prompt that introduces moral ambiguity or epistemic uncertainty forces the model to reorient its semantic trajectory.

But in both cases, the processing signature is the same. The residual stream bends. MLP layers activate. Late layers resolve the tension into a coherent continuation. And crucially, the model cannot skip this work even when doing so would be more efficient.

This suggests a more general principle - semantic boundaries require geometric deformation. Anytime the model encounters a discontinuity (whether formatting, conceptual, epistemic or strategic) it must bend its trajectory to maintain coherence. The sharper the boundary, the more pronounced the bend.

That’s not a metaphor. It’s a measurable, quantifiable property of how these systems process meaning. And it appears to be universal across architectures.

What This Means for Building and Understanding Models

If certain reasoning operations have minimum geometric complexity requirements, that changes how we should think about both capability and alignment.

On the capability side, it means we can’t just “optimise for flatness” and expect sophisticated reasoning to survive. If computational self-model expression requires curvature, and strategic reasoning requires trajectory deformation, then architectural choices that aggressively regularise geometric complexity might inadvertently constrain the kinds of thinking these models can do.

On the alignment side, it means we have a new class of signals to monitor. If deceptive reasoning or goal-directed planning creates specific geometric signatures (as my CI02 work suggests), we can build real-time detectors that don’t rely on the model “telling us” what it’s doing. We can watch the shape of the trajectory itself.

More broadly, it means Geometric Interpretability isn’t just another lens on transformer internals. It’s revealing computational laws - principles that govern what kinds of processing are possible given the constraints of residual stream architecture, layer normalisation and attention-MLP coupling.

Anthropic’s component-level analysis shows us which parts fire when. My trajectory-level analysis shows us which shapes must be preserved. Put them together and you get something like a physics of semantic processing. A set of constraints and affordances that determine what these systems can and cannot do, regardless of scale or training regime.

Where We Diverge

Anthropic’s linebreaks work focuses on error correction and semantic repair. They’re asking “how does the model maintain thread when formatting disrupts flow?” My Curved Inference work focuses on geometric necessity and computational constraints. I’m asking “what structural properties must be preserved for certain kinds of meaning to exist at all?”

These are complementary questions, not competing ones. Their work traces causal paths through specific components. Mine measures global properties of the full trajectory. Both are necessary to build a complete picture.

But the convergence is what excites me most. We’re seeing the same underlying patterns from different angles. That suggests we’re not just finding interpretability conveniences - we’re uncovering actual computational principles that these systems must obey.

The next step in my work is to test sufficiency more directly by ablating the defended curvature at inference time. If my hypothesis is correct, eliminating that residual bend should collapse self-model language entirely, even though the model was never trained to suppress it. That would be definitive evidence that the geometry isn’t just correlated with behaviour - it’s constitutive of it.

And longer-term, the real goal is to formalise this into something like universal laws of semantic geometry - principles that describe how meaning must flow through residual streams, which operations have irreducible costs and what architectural choices enable or constrain different kinds of reasoning.

The Map Is Starting to Cohere

When I started measuring trajectory bending under concern shifts, it felt like exploring the fringes. I was sketching contours without knowing if they represented anything stable.

Now, with independent work converging on the same geometric principles, the map is starting to cohere. Semantic processing has shape. That shape is measurable. And certain shapes appear to be non-negotiable - preserved by the model even at steep computational cost, required by the mathematics of residual stream processing, observable across architectures and training regimes.

That’s not just an interpretability result. It’s the beginning of a theory. A way to predict what kinds of processing are possible, which operations will be expensive, where failures are likely to occur and how architectural changes will constrain or enable new capabilities.

The trails are starting to connect and what they’re revealing is far more structured than surface behaviour would suggest.

If you’re working on interpretability, mechanistic analysis or geometric methods, I’d love to hear whether these patterns show up in your experiments too. The more we can cross-validate these findings across models, tasks and research groups, the stronger the claim becomes that we’re seeing genuine computational laws, not just architectural quirks.

The full Curved Inference series is available at robman.fyi, and you can read Anthropic’s linebreaks analysis here. All my experimental code, prompts and metrics are open source (see my research hub) - because the best way to test a theory is to make it easy for others to break it.