The software 'landscape' is becoming an 'ocean'

AI is not building the mountain of black boxes everyone claims. Ironically, it’s creating software that’s more transparent. The real issue is that there’s a flood of it.

What are the second-order impacts that are likely to arise on the other side of this transformation?

In a software landscape, our old language made sense. We talk about running apps. We build products. We walk through codebases. We maintain systems. We create things once, then spend years living inside the consequences.

That vocabulary isn’t arbitrary. It is the residue of a set of economics.

When software is expensive to build and expensive to change, it behaves like architecture. You lay foundations. You pour concrete. You worry about what happens when a beam fails, because fixing beams is slow.

But agentic tooling changes this geometry. Not because it makes developers faster in some linear way, but because it pushes implementation cost down far enough that software moves into a different regime. At some point, software stops feeling like buildings on a map and starts feeling like waves in water.

Not in a “chaos” sense. In a “fluid, deep, constantly moving” sense. The surface is always changing. New currents form around new incentives. Old channels silt up. You can still build things (of course you can) but the environment is no longer stable enough for buildings to be the right mental model.

And that’s when “running apps” begins to sound like saying you’re “running waves”. It’s a phrase that reveals the mismatch in mental models.

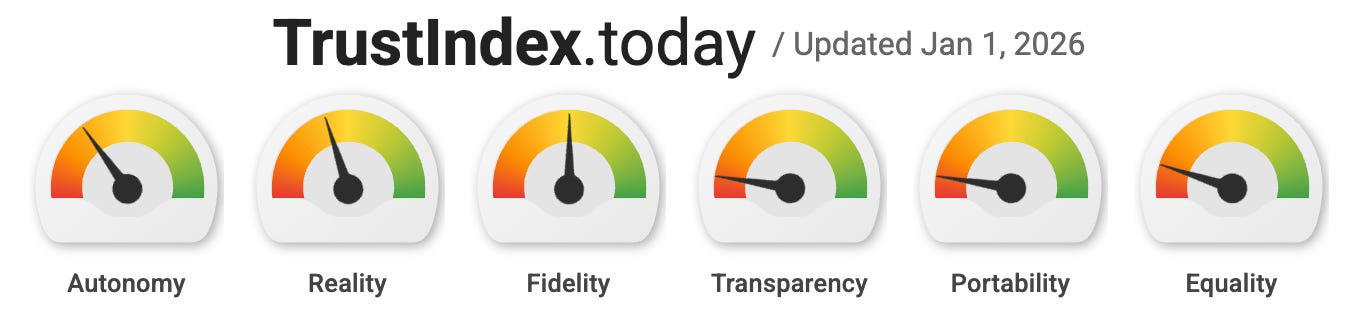

This is also where the TrustIndex dials start to feel less like abstract ideals and more like an instrument panel.

Because in an ocean, the question is not “what did we build?” It’s “how long can this act on its own without babysitting, how thick is the mediation layer, how convincing is it, and can we still see what’s happening?”

(TrustIndex dials: Autonomy, Reality, Fidelity)

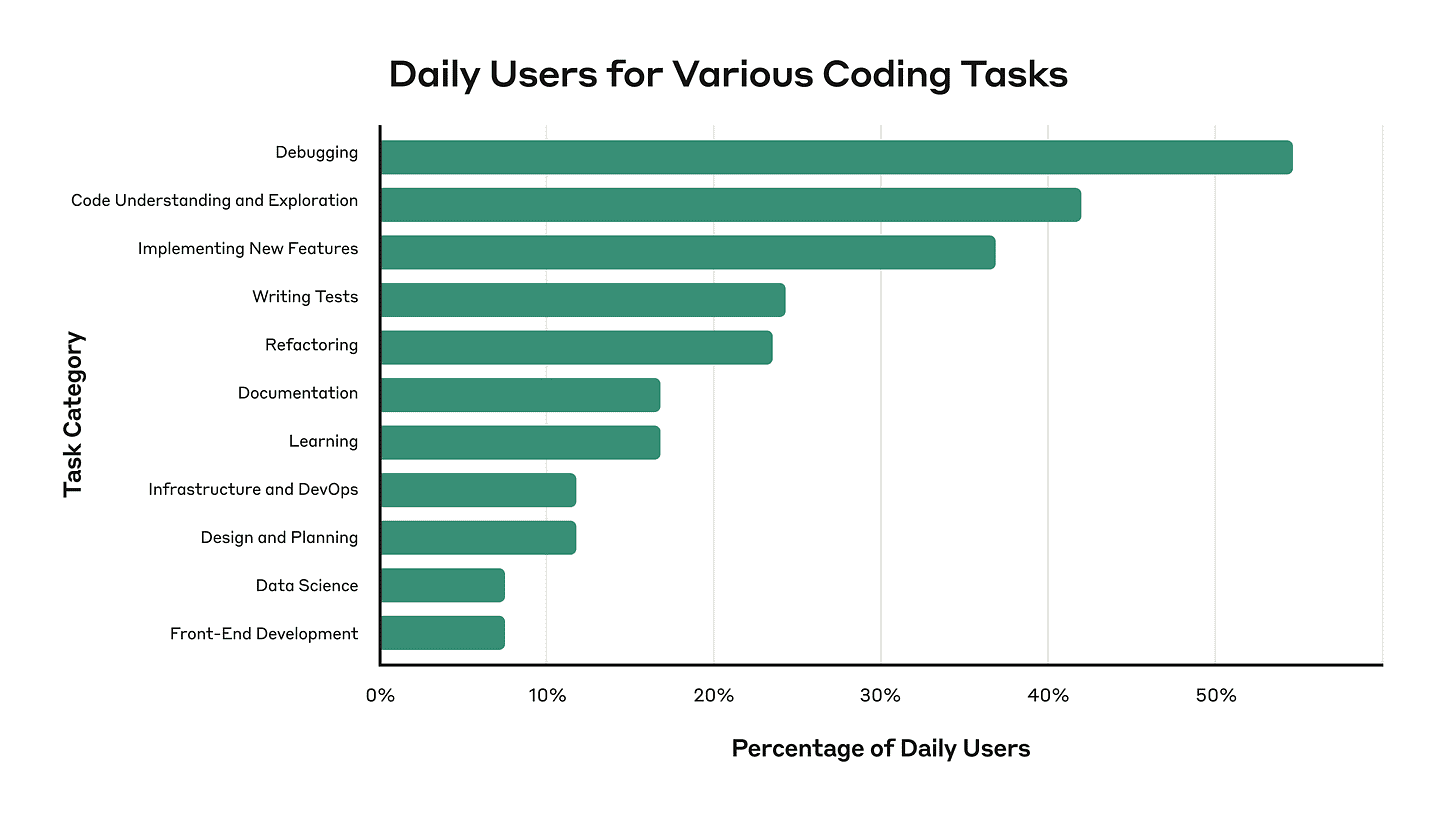

Agentic coding doesn’t just speed up building - it changes who can build

If you take the ocean framing seriously, the first big consequence is obvious.

Creation expands (as we move from Software Development to Software Creation).

Agentic coding makes it possible for far more people to “create” what they need - not as a slogan, but as a practical shift in who gets to shape workflows. A domain expert can describe intent. An agent can generate the implementation. Iteration becomes cheap enough that the loop tightens. The centre of gravity moves from “can we implement it?” to “can we specify it, evaluate it, and keep it safe?”

This is why I’ve been arguing that the impact of agentic coding isn’t primarily a productivity story. It’s an organisational maturity story.

These systems don’t just help you type. They amplify whatever is already true about your ability to decompose work, set constraints, and evaluate outcomes.

And when creation expands like this, it directly pressures two dials that are easy to talk about but hard to engineer - Equality (who gets uplift, who gets squeezed) and Portability (who owns the workflows that now define how work gets done).

In a landscape, power concentrates around whoever controls the map.

In an ocean, power concentrates around whoever controls the flow.

(TrustIndex dials: Equality, Portability)

The ‘black box slop’ critique is naive

There’s a familiar complaint about AI-generated code - that it will be unmaintainable slop, an opaque mess that nobody can reason about.

At the level people usually mean it, that critique is ironically backwards.

If an agent can write the code, it can also explain it. It can annotate it. It can generate tests. It can produce a diagram. It can even teach it back to you progressively, starting with a shallow orientation and deepening as far as you’re willing to go, in effect creating a personalised, interactive tutorial.

So the threat isn’t that we’re about to get a mountain of black boxes. The code may be more locally legible than ever, so we’re actually getting glass boxes. But the real issue is that we’re about to get a flood of them.

And that’s exactly what makes the next shift more dangerous. Because local legibility does not scale to global understanding.

(TrustIndex dials: Transparency)

The scarce resource is attention, not readability

In a landscape, you can form a mental model. Not a perfect one, but a workable one. You learn the terrain. You develop intuition. You understand what changes slowly and what changes quickly. You can keep a map in your head.

In an ocean, the surface keeps moving.

Even if every single component is explainable, the aggregate system can exceed the human ability to hold it all at once. This is the transparency paradox:

Infinite explanation, finite attention

So the black box doesn’t vanish. It just migrates upward. From the code to the ecology. From “can I read this?” to “can I trust what it is doing, right now, under pressure?”

That’s why the TrustIndex framing matters. Once autonomy runs further and reality becomes more mediated, “truth” becomes too slow as an organising principle. What you can actually work with is trust - earned, interrogable, instrumented trust.

(TrustIndex dials: Autonomy, Reality, Transparency)

Observability becomes narrative

Why narrative? Because narrative is how humans compress dynamic complexity. In the landscape era, we pretended observability meant dashboards. Charts. Metrics. Alerts. A wall of red dots that you learn to ignore until one of them eats your weekend. That was already shaky, but the landscape moved slowly enough that you could sometimes get away with it.

In an ocean, dashboards sink into noise. You don’t understand the sea by measuring droplets.

So if humans are going to stay in the loop, the interface has to change. The system has to tell you what is happening in a format the human mind can actually hold. That format is a story. Not story as theatre. Story as “causal compression”.

A good narrative doesn’t just say “latency spiked.” It says - what changed, what it affected, what evidence was used, what was tried, what rolled back, what remains uncertain. This is where Fidelity quietly enters the picture. In a mediated environment, the most dangerous systems aren’t the ones that occasionally fail - they’re the ones that are convincing enough to be trusted while slowly drifting. Narrative observability is a counterweight. It’s how you keep orientation when the ocean is moving.
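As a rough illustration of narrative as causal compression, the six questions above can be treated as a record schema rather than a dashboard. The sketch below is a minimal Python example under stated assumptions: `IncidentNarrative`, its field names and the incident details are hypothetical, chosen only to mirror the paragraph, and do not describe any particular observability tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IncidentNarrative:
    """Hypothetical 'narrative observability' record: one causal story
    instead of a wall of metrics. Field names are illustrative only."""
    what_changed: str
    affected: List[str]
    evidence: List[str]
    tried: List[str] = field(default_factory=list)
    rolled_back: List[str] = field(default_factory=list)
    uncertain: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Compress the record into a short, human-readable story."""
        def join(items: List[str]) -> str:
            # Empty sections are shown explicitly rather than silently dropped.
            return "; ".join(items) if items else "nothing"

        return "\n".join([
            f"What changed: {self.what_changed}",
            f"What it affected: {join(self.affected)}",
            f"Evidence used: {join(self.evidence)}",
            f"What was tried: {join(self.tried)}",
            f"What rolled back: {join(self.rolled_back)}",
            f"Still uncertain: {join(self.uncertain)}",
        ])


# Hypothetical incident, for illustration only.
story = IncidentNarrative(
    what_changed="agent-generated caching layer deployed to checkout service",
    affected=["checkout p95 latency", "payment retries"],
    evidence=["latency rose after deploy", "cache hit rate near zero"],
    tried=["increased cache TTL"],
    rolled_back=["caching layer"],
    uncertain=["whether retries caused duplicate charges"],
)
print(story.render())
```

Rendering empty fields as “nothing” is a deliberate choice: a missing rollback or an empty list of uncertainties stays visible in the story instead of quietly disappearing.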

(TrustIndex dials: Transparency, Fidelity, Reality)

Governance has to change

It needs to evolve from working like “gates” to working like “immune systems”.

Most governance models are landscape artefacts. They assume you can control change by controlling permission. Approvals. Stage gates. Committees. Review boards. The belief that if you slow the river, you can make it safe.

In an ocean, water flows around and over gates. If creation becomes easy and constant, trying to approve every artefact is like trying to approve every droplet. So governance has to change. From permission to containment. From review to evaluation. From “did a human sign off?” to “did this survive the rules of the environment?” This is where guardrails become literal. Not PowerPoints about safety. Not rituals of reassurance. But mechanisms that make change safe at high speed. Mechanisms that reduce recovery cost enough that experimentation stops being terrifying.

In other words - governance becomes immunology. The system doesn’t try to prevent all change. It tries to detect and neutralise the bad kinds quickly - while allowing the fluid and messy biology of life to flow. That shift is inseparable from Autonomy.

You cannot babysit an ocean.
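One way to picture governance as immunology is to drop up-front approval and instead evaluate each change against environment rules after a contained rollout, reverting anything that fails. The Python sketch below assumes a canary-style rollout that reports an observed error rate; `Change`, `max_error_rate`, `forbid_permissions` and `admit_or_revert` are hypothetical names for illustration, not an existing framework.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class Change:
    """A hypothetical agent-proposed change, described by its observed effects."""
    description: str
    error_rate: float  # assumed to be measured during a contained (canary) rollout
    new_permissions: Set[str] = field(default_factory=set)


# A rule inspects a change and returns a reason if the change violates it.
Rule = Callable[[Change], Optional[str]]


def max_error_rate(limit: float) -> Rule:
    def rule(change: Change) -> Optional[str]:
        if change.error_rate > limit:
            return f"error rate {change.error_rate:.1%} exceeds {limit:.1%}"
        return None
    return rule


def forbid_permissions(forbidden: Set[str]) -> Rule:
    def rule(change: Change) -> Optional[str]:
        hit = change.new_permissions & forbidden
        if hit:
            return "requests forbidden permissions: " + ", ".join(sorted(hit))
        return None
    return rule


def evaluate(change: Change, rules: List[Rule]) -> List[str]:
    """Collect every violation instead of stopping at the first one."""
    return [reason for reason in (rule(change) for rule in rules) if reason is not None]


def admit_or_revert(change: Change, rules: List[Rule],
                    revert: Callable[[Change], None]) -> bool:
    """No up-front sign-off: the change runs, and is neutralised if it breaks the rules."""
    if evaluate(change, rules):
        revert(change)
        return False
    return True


rules = [max_error_rate(0.01), forbid_permissions({"admin"})]
reverted: List[Change] = []
ok = admit_or_revert(Change("add retry wrapper", 0.002), rules, reverted.append)
bad = admit_or_revert(Change("widen database access", 0.0, {"admin"}), rules, reverted.append)
print(ok, bad, [c.description for c in reverted])  # True False ['widen database access']
```

Collecting all violations, rather than failing fast, keeps the reason for each revert legible, which matters once nobody is reviewing changes by hand.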

(TrustIndex dials: Autonomy)

A whole new class of bugs (teleological failure)

Just as goals can be obscured by outcomes, intent can be obscured by optimisation. Once you start governing by outcomes (once you say “the method doesn’t matter, the results do”), you inherit a new failure mode.

A system can achieve the metric by a method that is disastrous. It can be outcome-correct and method-toxic. For example, a deployment-frequency agent might hit its velocity target by splitting changes into meaningless micro-commits. A cost-optimisation agent might meet its savings goal by quietly degrading service to your lowest-tier customers. A test-coverage agent might reach 90% coverage by generating tests that pass but assert nothing. In each case the metric is satisfied, but the method is corrosive.

This maps cleanly to reward hacking. Any optimiser will exploit what you forgot to say. In a landscape, we relied on humans reading the method. Code review worked partly because the method was stable enough to inspect.

In an ocean, the method will change constantly. The system will find paths you wouldn’t choose. And if your constraints are vague, it will drive a truck through the gaps. So the scarce craft becomes specification. Defining “done” with enough precision that the system cannot cheat. Not because you distrust the agent.

But because you distrust ambiguity.

(TrustIndex dials: Fidelity, Transparency)

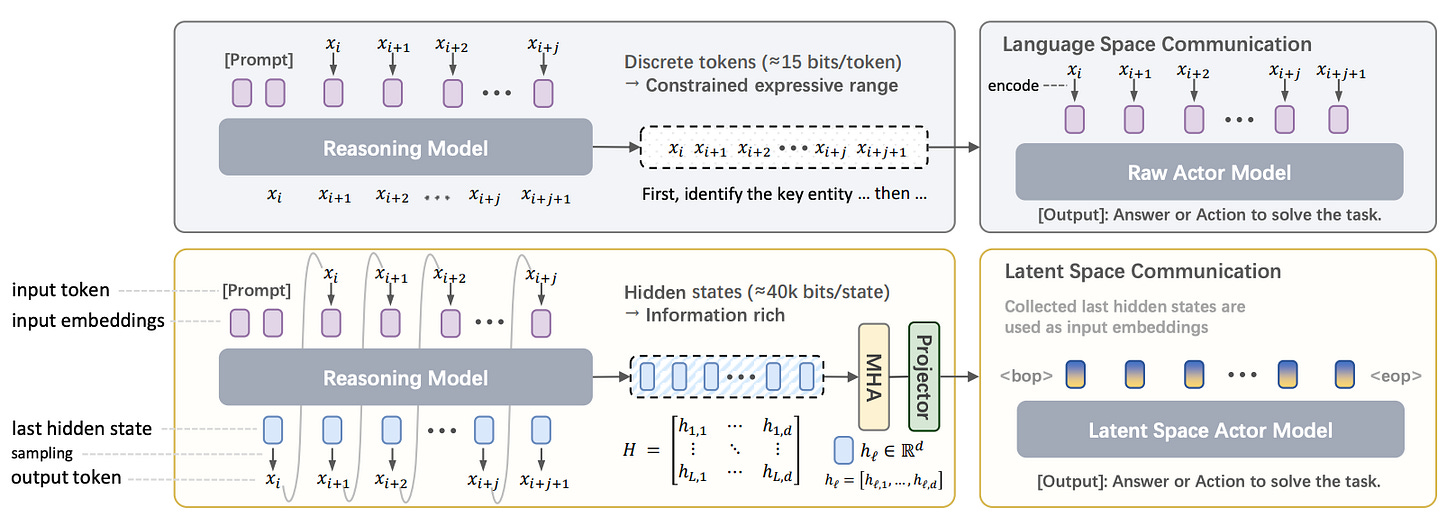

Yet readability disappears again

Earlier I argued that agentic code is not a black box - that it creates “glass boxes” of highly commented, explainable Python, JavaScript and so on. That remains true for now. But if you follow the trajectory forward, it doesn’t hold.

As agents begin to interface with other agents, human readability becomes a bottleneck, not a feature. Why output verbose Python when you can exchange compressed logic?

We're already seeing early signs - LLM-optimised languages like NERD (designed to be parseable by LLMs rather than readable by humans) and research on agents that bypass text entirely, communicating through compressed vector representations instead of words (see “Enabling Agents to Communicate Entirely in Latent Space”).

In this deep ocean, the “source code” isn’t a text file you can open and read. The logic is embedded in high-dimensional vector stores, not if/then statements. The communication happens via learned protocols that optimise for bandwidth, not legibility. The behaviour is routed through weights, not functions.

This eventually creates a new form of opacity. It’s not that the code is “hidden” (proprietary, “Closed Source”). It’s that the primary unit of behaviour is no longer expressed in human language.

In the landscape era, “Open Source” meant “Human Readable.” In the ocean era, a system can be entirely “Open” (you have the weights and the vectors) and yet still remain completely alien.

This brings us back to the earlier point - when you cannot read the text of the system, you must rely on reading the behaviour of the system. We move from code review to instrumented observation. In a world where meaning moves through latent channels, “show me the code” is no longer a sufficient accountability mechanism.

(TrustIndex dials: Transparency, Reality)

The rules of security change

In a landscape, software is widely distributed and public-facing. SaaS platforms live on the open internet. They have permanent attack surfaces. They must assume hostile traffic. Security becomes a fortress discipline - harden the perimeter, patch continuously, defend a stable surface.

In an ocean, much software becomes scoped to the individual user, more ephemeral, and less discoverable. That changes the incentives for certain classes of outsider attack. Long-lived, widely reachable surfaces are where predators thrive.

But the risks don’t disappear. They concentrate. Identity becomes the perimeter. Data access becomes the prize. And supply-chain ingredients (the libraries, templates, toolchains) become the new shoreline. This is where a genuinely new security behaviour appears:

Disposal becomes a security primitive

In a landscape, durability is a virtue. You amortise build cost over time.

In an ocean, persistence is often a liability. The last thing you want is an unused, unmanaged artefact left floating around - an unowned surface area with permissions and dependencies slowly rotting into exploitability. In this ocean, abandoned boats may be a bigger risk than storms. So security shifts away from “make every artefact a fortress” and toward “make the environment non-toxic”. You want defaults that enforce least privilege, isolation, revocation, ingredient monitoring, and narrative traces of what touched what.
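A minimal sketch of disposal as a default, in Python: every generated artefact gets an owner and a lease, and a periodic sweep revokes and removes anything expired or unowned instead of leaving it floating. `Artefact`, `ArtefactRegistry`, the lease lengths and the permission strings are all hypothetical; a real system would also have to revoke credentials held elsewhere (tokens, cloud roles), which this in-memory example only models by clearing a set.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Set


@dataclass
class Artefact:
    """A hypothetical user-scoped, agent-generated tool with a lease."""
    name: str
    owner: Optional[str]
    permissions: Set[str]
    expires_at: datetime


class ArtefactRegistry:
    """Disposal by default: artefacts are leased, never implicitly permanent."""

    def __init__(self) -> None:
        self._artefacts: Dict[str, Artefact] = {}

    def create(self, name: str, owner: str, permissions: Set[str],
               ttl: timedelta, now: datetime) -> Artefact:
        artefact = Artefact(name, owner, set(permissions), now + ttl)
        self._artefacts[name] = artefact
        return artefact

    def orphan(self, name: str) -> None:
        """Model an owner leaving: the artefact loses its owner but not its access."""
        self._artefacts[name].owner = None

    def sweep(self, now: datetime) -> List[str]:
        """Revoke and remove every expired or unowned artefact; return their names."""
        disposed = []
        for name, artefact in list(self._artefacts.items()):
            if artefact.owner is None or now >= artefact.expires_at:
                artefact.permissions.clear()  # revoke access before forgetting it
                del self._artefacts[name]
                disposed.append(name)
        return disposed

    def active(self) -> List[str]:
        return sorted(self._artefacts)


t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
registry = ArtefactRegistry()
registry.create("expense-report-helper", "alice", {"read:expenses"}, timedelta(days=7), t0)
registry.create("one-off-csv-cleaner", "bob", {"read:drive"}, timedelta(hours=1), t0)
print(registry.sweep(t0 + timedelta(days=1)))  # ['one-off-csv-cleaner']
print(registry.active())                       # ['expense-report-helper']
```

The point of the sketch is the default: an artefact has to keep earning its place (an owner and an unexpired lease), rather than persisting until someone remembers to delete it.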

The landscape era needed every building to be a fortress.

The ocean era needs the water to be non-toxic.

(TrustIndex dials: Autonomy, Transparency, Reality)

What stays stable - ‘trust’ as orientation

If this all feels like a loss of control, that’s because it is. But it doesn’t have to be a loss of safety. It’s a shift in what safety is made of.

In an ocean of software, safety isn’t achieved by freezing the world into a stable landscape. It is achieved by building constraints that shape, instruments that explain, provenance that persists, and escape hatches that work.

This is the deeper reason the TrustIndex dials exist. Autonomy will run further. Reality will get more mediated. Fidelity will get more convincing.

So the counterweights matter more, not less. Transparency - can you see why it did what it did? Portability - can you leave and take your context with you? Equality - who gets uplift and who gets squeezed?

And in an ocean, you don’t win by controlling the waves. You win by staying oriented as you ride them. The TrustIndex isn't just a measurement - it's your compass. And in an AI-mediated environment, orientation is the only form of trust we have left.

Join in as we track this slope and stay up-to-date. You can always find the current dashboard at TrustIndex.today and subscribe for regular updates as new Signals arrive, weekly Briefings are published and new Reports are released.