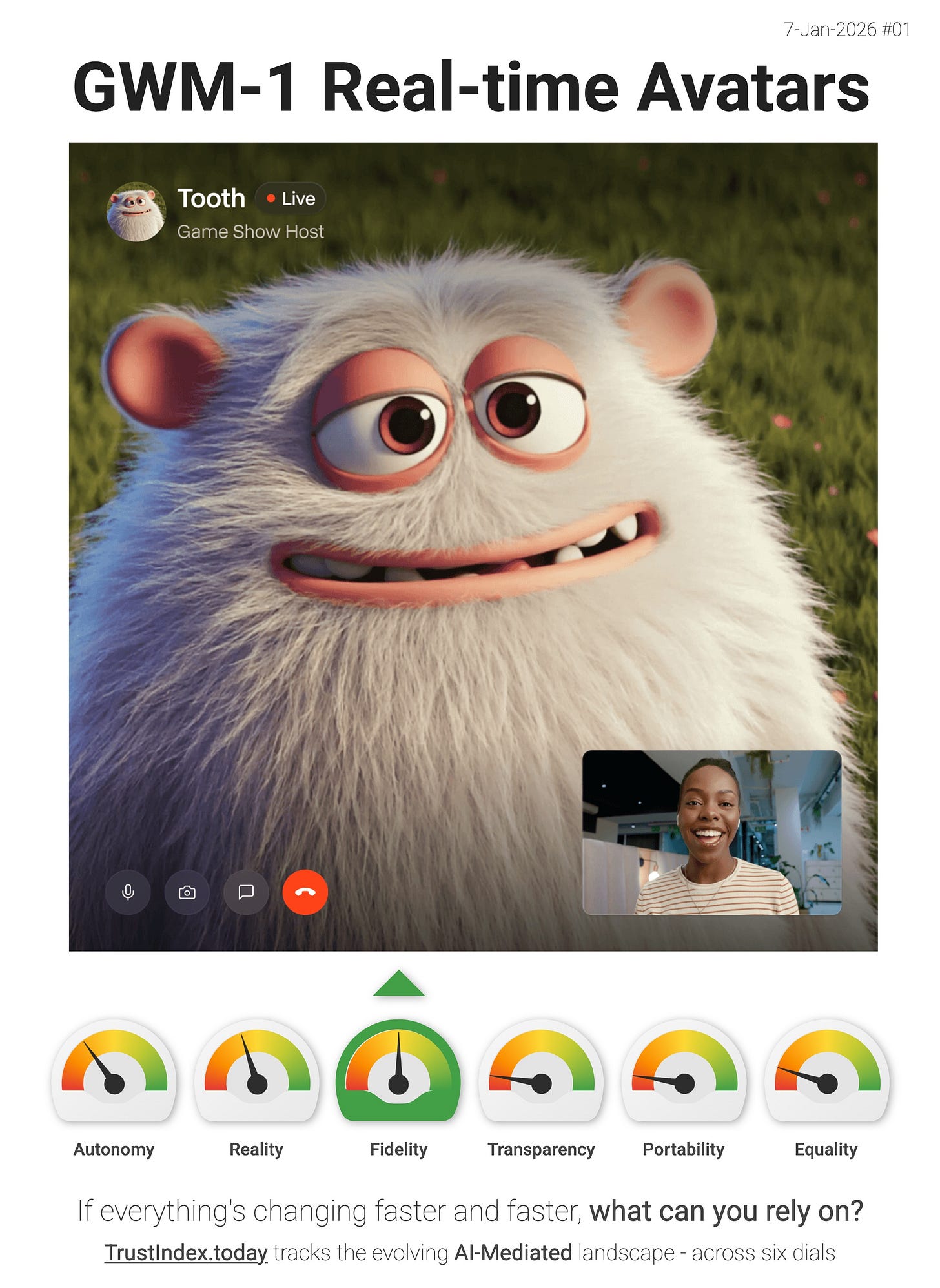

Real-time Audio-Driven AI Avatars

Runway's "General World Model" is bringing interactive and real-time AI avatars to life - through their app and through their API.

Runway’s GWM-1 (“General World Model”) is a meaningful Fidelity signal - not just prettier generations, but real-time simulation that can stay coherent as you interact. Runway describes it as “built to simulate reality in real time - interactive, controllable and general-purpose.”

One of the most interesting wedges here is the real-time avatars. Runway says GWM Avatars are an “audio-driven interactive video generation model” that simulates natural human motion and expression for photorealistic or stylized characters - including “realistic facial expressions, eye movements, lip-syncing and gestures,” and, crucially, can run “for extended conversations without quality degradation.”

That combination (interactive + long-duration + no visible degradation) puts upward pressure on the Fidelity dial: synthetic people that don’t just look convincing in a short clip, but hold up under live interaction, which is the point where most “wow demos” fall apart.

“Bring personalized tutors to life. Responsive characters that explain concepts, react to questions, and hold extended conversations with the natural expressions and gestures that make learning feel like a real dialogue.” - Runway

If this sticks, it accelerates a shift from generated media as “content” to generated media as “presence” (tutors, support agents, hosts, companions) - where the difference-maker is not a single perfect frame, but sustained believability over minutes of conversation.

> The interface layer is thickening. If you disagree with my interpretation, or you’ve spotted a better signal, reply and tell me.