Live AI Translation Between Languages

Google has pushed speech-to-speech translation further into the flow of everyday life

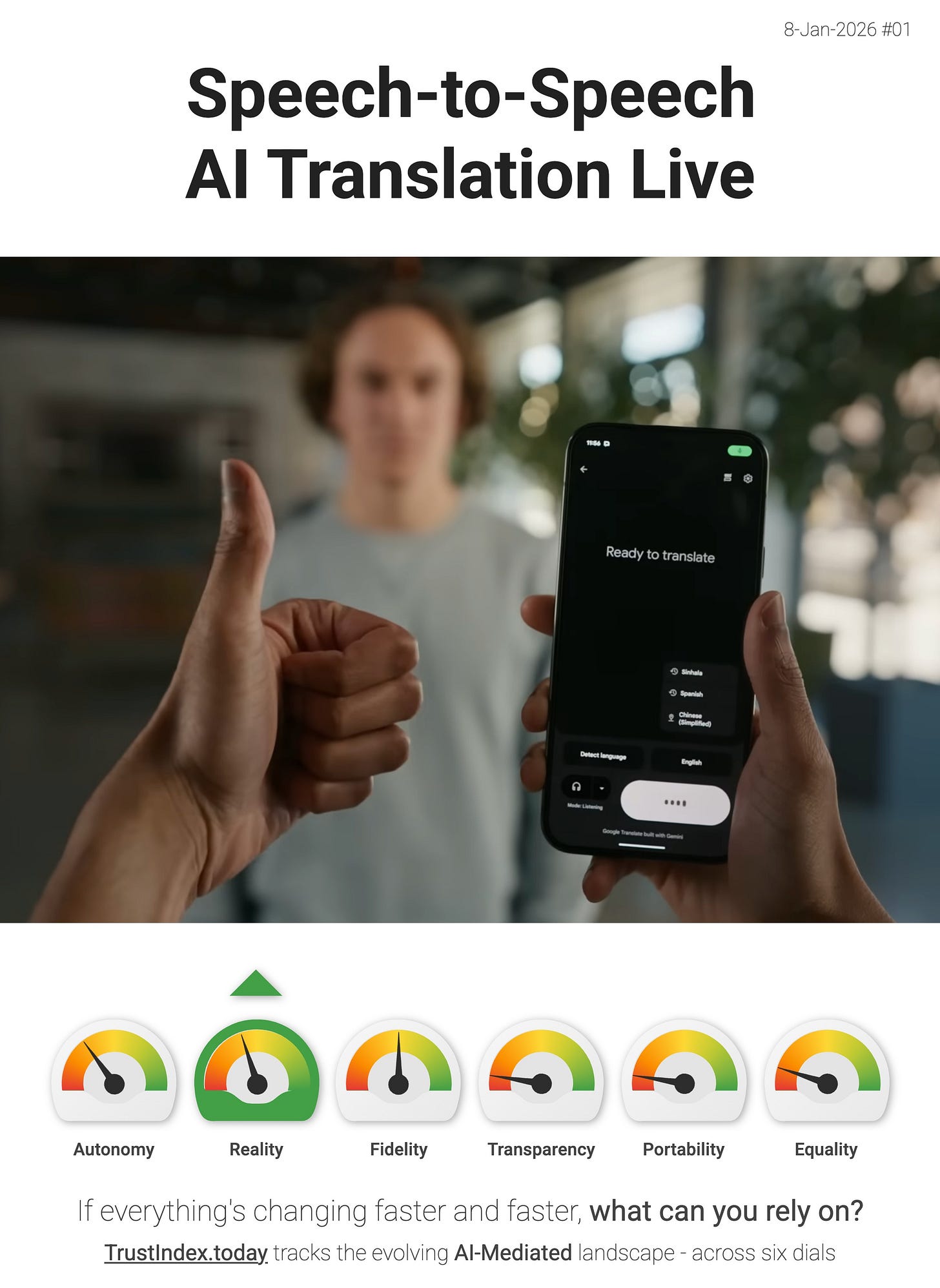

Google Translate is rolling out a beta that lets you hear real-time translations in your headphones, powered by Gemini’s native speech-to-speech translation capabilities.

This adds upward pressure on the Reality dial. When translation moves from “a thing you do” to “a layer you wear”, the AI starts sitting “between you and other people’s conversations”: filtering, re-timing, and re-voicing what you perceive in real time. Google explicitly claims the experience preserves “tone, emphasis and cadence”, which makes the mediated layer easier to accept as the default interface for live interaction.

"...get a more natural, accurate translation, instead of a literal word-for-word translation. Gemini parses the context to give you a helpful translation that captures what the idiom really means." - Google

It’s also a scale signal: Google says it works with any pair of headphones, supports 70+ languages, and is rolling out on Android in the US, Mexico, and India (with more to come). If this becomes normal, “raw audio” becomes optional, and cross-language conversation becomes another domain where reality is increasingly “AI-shaped by default”.

> The interface layer is thickening. If you disagree with my interpretation, or you’ve spotted a better signal, reply and tell me.