Control A Robot Dog & Work Using Your Brain

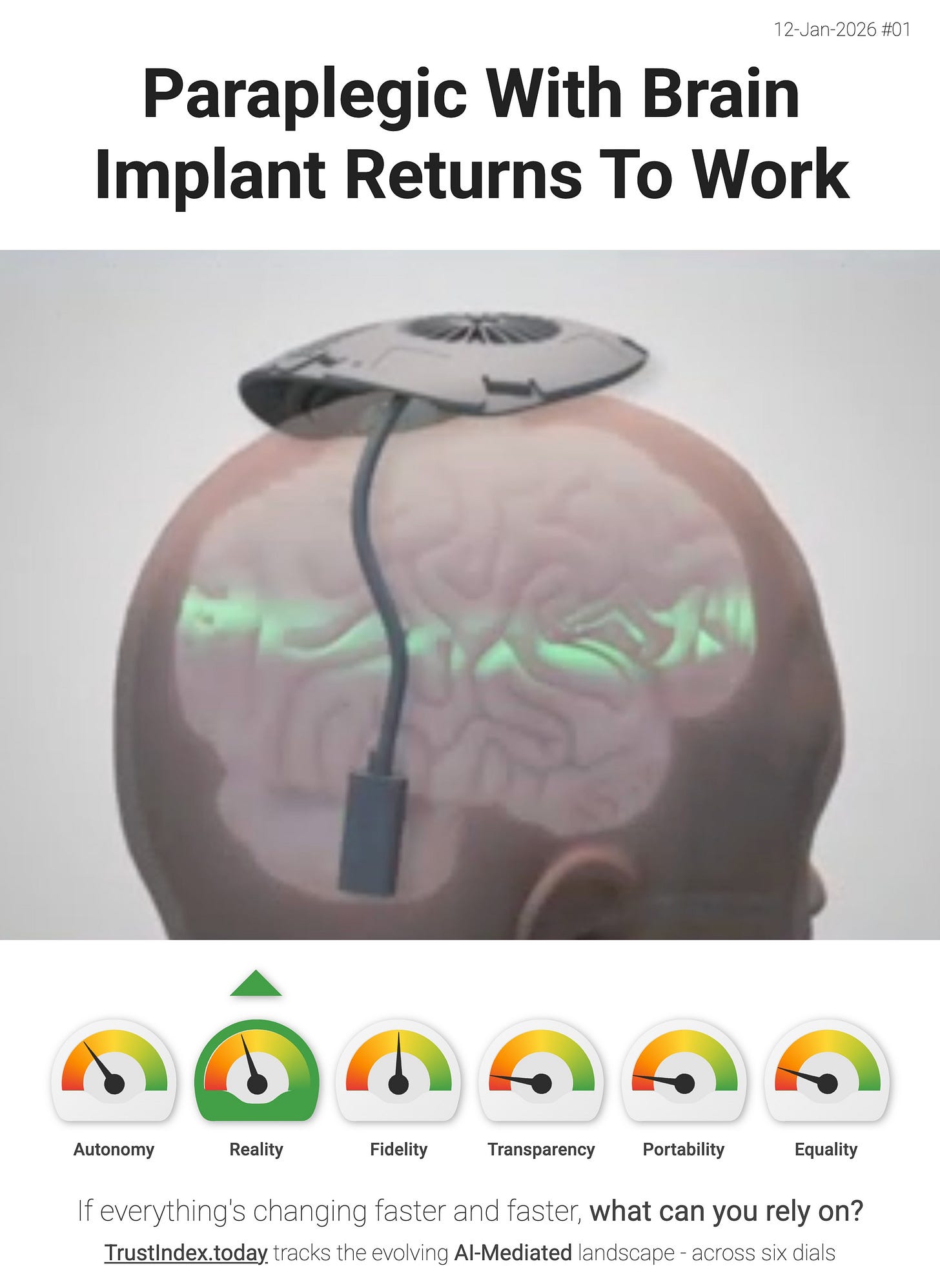

A paraplegic patient is now using an invasive, wireless brain–computer interface to control physical devices in everyday settings.

A real Reality dial signal out of China: a paraplegic patient is now using an invasive, wireless brain–computer interface to control physical devices in everyday settings. Not in a lab demo, but in “real-life scenarios”.

The Chinese Academy of Sciences reports that its researchers have completed a second invasive BCI clinical trial using a high-throughput wireless system (WRS01). The system let a patient stably control a smart wheelchair and a robot dog via brain signals, including autonomous movement and object retrieval.

The details matter because they describe the “plumbing” required for reality-mediation to feel natural: performance improvements of roughly 15–20% from compression and decoding techniques, and end-to-end latency under 100 ms from signal capture to command execution. That’s fast enough to feel smooth rather than “remote controlled”. They also report that the system can be recalibrated online during daily use (“gets smoother the more you use it”), and that control becomes more “internalized” as the patient’s skill increases.
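To make “recalibrated online” a little more concrete, here is a toy sketch of the general idea: a decoder that nudges its weights after every sample, so its error shrinks with use. This is not the WRS01 method, which the report does not describe at this level. The least-mean-squares update, the 8 synthetic channels, the learning rate, and every function name below are my own assumptions for illustration.

```python
import random


def predict(weights, features):
    """Linear decoder: map per-channel features to one control value."""
    return sum(w * x for w, x in zip(weights, features))


def lms_update(weights, features, target, lr=0.05):
    """One least-mean-squares step: move weights toward the intended output."""
    error = target - predict(weights, features)
    new_weights = [w + lr * error * x for w, x in zip(weights, features)]
    return new_weights, error


def simulate(steps=2000, n_channels=8, seed=0):
    """Run the decoder on synthetic data and record the error at each step."""
    rng = random.Random(seed)
    # Hypothetical "true" mapping from neural features to intended movement.
    true_weights = [rng.uniform(-1, 1) for _ in range(n_channels)]
    weights = [0.0] * n_channels  # the decoder starts uncalibrated
    errors = []
    for _ in range(steps):
        features = [rng.gauss(0, 1) for _ in range(n_channels)]
        target = predict(true_weights, features)
        weights, error = lms_update(weights, features, target)
        errors.append(abs(error))
    return errors


errors = simulate()
early = sum(errors[:100]) / 100   # mean error over the first 100 samples
late = sum(errors[-100:]) / 100   # mean error over the last 100 samples
print(f"early error: {early:.4f}, late error: {late:.6f}")
```

In this toy setup the late error ends up far below the early error, which is the “gets smoother the more you use it” effect in miniature. A real system would have to contend with noisy, drifting signals and would not have a clean target to learn from.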

“In the process of industrializing the technology, the team adopted a systematic approach, using neural interface electrodes as a foundation to gradually build a technical system that integrates systems, optimizes algorithms, and expands application scenarios.”

- Chinese Academy of Sciences

And it’s not just mobility. The team also describes broader social integration: they worked with local disability organizations to help the patient participate in online activities so he could return to work. It’s a small but telling example of how “digital agency” can re-enter the physical world through a mediated interface. The next iteration (WRS02) is already planned, with 256 channels.

> The interface layer is thickening. If you disagree with my interpretation, or you’ve spotted a better signal, then reply and tell me.