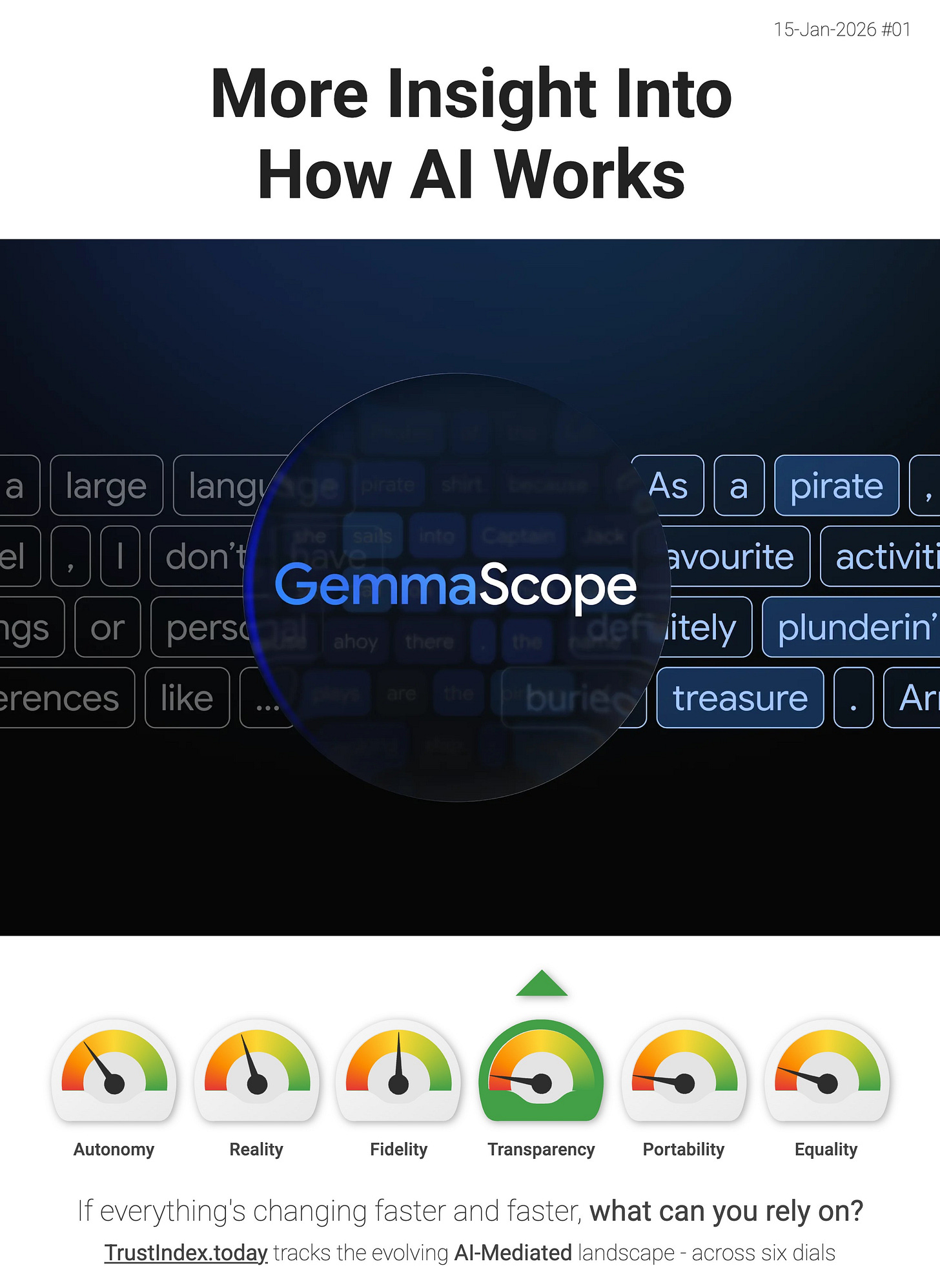

An Open Interpretability Suite

Gemma Scope 2 is another step towards helping researchers understand and debug internal behaviours tied to safety issues like hallucinations, jailbreaks, and sycophancy.

Google DeepMind’s Gemma Scope 2 is a clear Transparency signal - not a mainstream consumer feature, but meaningful pressure toward making model behaviour inspectable instead of “black box vibes”.

DeepMind describes Gemma Scope 2 as an open interpretability suite for the Gemma 3 family (from 270M to 27B parameters) - meant to help researchers understand and debug internal behaviours tied to safety issues like hallucinations, jailbreaks, and sycophancy.

Mechanistically, it’s a “microscope” built from sparse autoencoders (SAEs) and transcoders trained on every layer of Gemma 3, with an interactive demo via Neuronpedia and downloadable artifacts on Hugging Face.
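To make the "microscope" idea concrete, here is a minimal sketch of what a sparse autoencoder does to a single activation vector. It is a toy under stated assumptions: a plain ReLU encoder, random weights, and made-up sizes (`D_MODEL`, `D_FEATURES`). It is not Gemma Scope 2's architecture, weights, or loading API, and every name in it is hypothetical. An SAE expands a dense activation into a much wider, mostly-zero feature vector and then reconstructs the original from it. A transcoder has the same shape but is trained to predict a layer's output from its input instead of reconstructing the same activation.

```python
import math
import random

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, x)) for row in W]

def sae_encode(x, W_enc, b_enc):
    """Dense activation -> non-negative feature activations (ReLU)."""
    pre = [p + b for p, b in zip(matvec(W_enc, x), b_enc)]
    return [max(0.0, p) for p in pre]

def sae_decode(f, W_dec, b_dec):
    """Feature activations -> reconstructed dense activation."""
    return [r + b for r, b in zip(matvec(W_dec, f), b_dec)]

# Toy sizes, chosen for illustration only.
D_MODEL, D_FEATURES = 8, 32

random.seed(0)
# Random stand-ins for trained weights (assumption: a real SAE learns these).
W_enc = [[random.gauss(0, 1) / math.sqrt(D_MODEL) for _ in range(D_MODEL)]
         for _ in range(D_FEATURES)]
b_enc = [-1.0] * D_FEATURES  # negative bias keeps many features at zero
W_dec = [[random.gauss(0, 1) / math.sqrt(D_FEATURES) for _ in range(D_FEATURES)]
         for _ in range(D_MODEL)]
b_dec = [0.0] * D_MODEL

x = [random.gauss(0, 1) for _ in range(D_MODEL)]  # stand-in for a model activation
features = sae_encode(x, W_enc, b_enc)
x_hat = sae_decode(features, W_dec, b_dec)

# The indices of the non-zero features are what a researcher would inspect.
active = [i for i, f in enumerate(features) if f > 0.0]
print(f"{len(active)} of {D_FEATURES} features active: {active}")
```

With trained weights, each active index would ideally correspond to a human-interpretable concept. With random weights, as here, the indices mean nothing, and the sketch only shows the data flow.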

“These tools can enable us to trace potential risks across the entire ‘brain’ of the model. To our knowledge, this is the largest ever open-source release of interpretability tools by an AI lab to date.” - Google DeepMind

That’s upward pressure on the Transparency dial - more tooling for provenance-like understanding of why a model is behaving a certain way, where problematic behaviour is represented internally, and what changes might mitigate it. It’s limited (Gemma 3 only, and mostly for researchers today), but it nudges the ecosystem toward auditability and verifiability, which are the foundations for trustworthy AI-mediated interfaces at scale.

> The interface layer is thickening. If you disagree with my interpretation, or you’ve spotted a better signal, reply and tell me.